Demand for bandwidth-intensive, data-driven services is fueling growth in compute, data storage, and networking capabilities. That growth puts pressure on connectors and cables to deliver data at higher speeds with better signal quality and less heat.

Whether it’s 5G implementations, our growing reliance on AI and machine learning, or the soaring impact of the Internet of Things, data centers must constantly adapt. To keep pace, companies are moving from monolithic data center designs to distributed, disaggregated architectures.

This modularized approach avoids the extensive investment of traditional server or storage build-outs. Instead, mix-and-match components are used to upgrade capabilities in a piecemeal fashion—cutting the time, effort, and expense to deploy new hardware.

The effectiveness of this approach, however, depends on meeting networking and compute connectivity requirements. Specifically, optimizing a modular data center comes down to four keys: space constraints, signal integrity, cabling, and thermal management.

Space constraints

Two trends have converged in recent years to put a squeeze on data centers. The first is shrinking square footage. For the past decade, companies have been building smaller data centers to reduce their carbon footprint and lower energy bills, property costs, and site maintenance.

The second is hardware density. Despite greater use of the cloud and virtualization, more hardware is being crammed into data centers than ever. In a 2020 survey by AFCOM, 68% of respondents said rack density has increased over the past three years, and 26% said the increase was significant.

Some of this is due to the growth of AI, which requires more specialized chips, but it is also part of a broader trend of disaggregation and modularization. Disaggregation takes the traditional all-in-one server and decomposes it into separate resources, such as dedicated hardware for compute, memory, storage, and networking. Disaggregation also takes the form of edge computing, in which computing power moves out of the centralized cloud and closer to the user.

In many cases, these resources remain within the same rack unit, but are broken out onto separate modules. While this approach retains the same basic architecture as a monolithic design, it complicates connectivity. Components that once lived on the same PCB now reside on different modules. As such, they can no longer communicate via PCB traces. Instead, modules must communicate via connectors and cables.

This raises the question of how to fit more parts, meaning more connectors and cables, into less space. Several new types of connectors have been developed to address this concern.

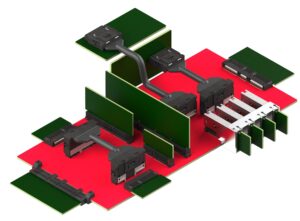

Mezzanine connectors, which couple two PCBs together, are a good example (see Figure 1). These connectors help when adding accelerators to CPU cards. The Open Compute Project’s Open Accelerator Infrastructure (OAI) group has developed specifications for an open-hardware compute accelerator module form factor and its interconnects. 112G mezzanine connectors based on the OAI Universal Baseboard (UBB) Design Specification v1.5 can achieve twice the bandwidth of the prior generation while maintaining an extremely small footprint.

Edge card connectors offer a similar option, letting you add memory, storage, and accessory cards to a system in a highly space-efficient manner (Figure 2). As with mezzanine connectors, edge card connectors are widely available in standardized configurations recognized by the Small Form Factor (SFF) Committee, JEDEC, the Open Compute Project, and the Gen-Z Consortium. This enables large-volume purchasing across a variety of applications, improving cost efficiency as well as space efficiency.

Signal integrity

Just as disaggregation complicates volumetric efficiency, it also introduces new concerns around signal integrity. Signal integrity was already a concern: PCBs currently handle signals of about 5 GHz at best, while new functionality requirements for applications such as 5G are driving signal frequencies of 25 GHz and higher.
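To connect signal frequencies with the lane data rates discussed in the cabling section, a common first-order rule is that a lane's Nyquist frequency is half its symbol rate, and PAM4 carries two bits per symbol where NRZ carries one. The sketch below assumes nominal lane rates and ignores coding overhead; the function name `nyquist_ghz` is ours, not from any standard.

```python
def nyquist_ghz(bit_rate_gbps: float, bits_per_symbol: int) -> float:
    """Nyquist frequency (GHz) of a serial lane: half its symbol rate.

    bits_per_symbol is 1 for NRZ and 2 for PAM4.
    """
    if bits_per_symbol < 1:
        raise ValueError("bits_per_symbol must be at least 1")
    symbol_rate_gbd = bit_rate_gbps / bits_per_symbol  # symbol rate in gigabaud
    return symbol_rate_gbd / 2


if __name__ == "__main__":
    # Nominal lane rates; real line rates add a few percent of coding overhead.
    for label, rate_gbps, bits in [
        ("28 Gb/s NRZ", 28.0, 1),
        ("56 Gb/s PAM4", 56.0, 2),
        ("112 Gb/s PAM4", 112.0, 2),
    ]:
        print(f"{label}: Nyquist = {nyquist_ghz(rate_gbps, bits):.1f} GHz")
```

Under these assumptions, 56 Gb/s PAM4 needs the same channel bandwidth as 28 Gb/s NRZ, while 112 Gb/s PAM4 pushes the Nyquist frequency to 28 GHz, above the 25 GHz mark.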

Modularization only adds to the challenge by increasing the number of components that must communicate relatively long distances over lossy PCB traces. Consequently, high-speed, high-density applications are moving away from traditional board designs to allow for intra-module cable connections.
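Why routing distance matters can be sketched by treating a channel's insertion loss as a sum of per-inch and per-connector contributions, then comparing the total against a fixed budget. Every dB figure below is a hypothetical placeholder chosen only to illustrate the arithmetic, not measured or vendor data; real loss depends on frequency, laminate, and cable gauge.

```python
"""Toy insertion-loss budget: all-trace route vs. cabled route.

All dB values are hypothetical placeholders for illustration only.
"""

PCB_TRACE_DB_PER_IN = 1.0  # hypothetical loss of a long PCB trace, dB per inch
CABLE_DB_PER_IN = 0.15     # hypothetical loss of a twinax cable, dB per inch
CONNECTOR_DB = 1.0         # hypothetical loss per mated connector, dB


def channel_loss_db(trace_in: float, cable_in: float = 0.0, connectors: int = 0) -> float:
    """Sum the insertion-loss contributions along one channel."""
    return (trace_in * PCB_TRACE_DB_PER_IN
            + cable_in * CABLE_DB_PER_IN
            + connectors * CONNECTOR_DB)


def within_budget(loss_db: float, budget_db: float) -> bool:
    """True if the channel loss does not exceed the allowed budget."""
    return loss_db <= budget_db


if __name__ == "__main__":
    BUDGET_DB = 16.0  # hypothetical end-to-end loss budget
    all_trace = channel_loss_db(trace_in=20.0)
    cabled = channel_loss_db(trace_in=2.0, cable_in=18.0, connectors=2)
    print(f"20 in of trace: {all_trace:.1f} dB, fits: {within_budget(all_trace, BUDGET_DB)}")
    print(f"2 in trace + 18 in cable: {cabled:.1f} dB, fits: {within_budget(cabled, BUDGET_DB)}")
```

Even with two extra connectors, moving most of the distance from board traces onto cable keeps the hypothetical channel well inside its budget.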

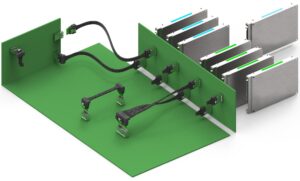

New PCIe connectors can create a direct, height-optimized connection from anywhere in the system to a point near an ASIC. This lets signals bypass board traces and allows systems to use lower-loss materials. The result is more space-efficient wiring along with better signal integrity, lower insertion loss, and reduced latency.

The latest PCIe Gen 4 and Gen 5 cabling and connector systems provide high bandwidth in server and storage applications, up to 32 GT/sec per lane with PCIe 5.0 (Figure 3). Some of these connectors also suit serial-attached SCSI (SAS) protocols: they support the current SAS-3 standard (12 Gb/sec) and are future-proofed for SAS-4 (24 Gb/sec). This new generation of connectors should help solve data center space issues and boost system performance well into the future.
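Per-lane PCIe rates scale with link width. The sketch below estimates usable bandwidth per direction from the published signaling rates (16 GT/s for PCIe 4.0, 32 GT/s for PCIe 5.0) and the 128b/130b encoding both generations use. It ignores packet and protocol overhead, so real throughput is somewhat lower; the function name is illustrative.

```python
# Raw per-lane signaling rates in GT/s for PCIe 4.0 and 5.0.
PCIE_GT_PER_S = {4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code used by PCIe 3.0 through 5.0


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth per direction, in Gb/s, before protocol overhead."""
    if gen not in PCIE_GT_PER_S:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes < 1:
        raise ValueError("lanes must be at least 1")
    return PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes


if __name__ == "__main__":
    for gen in (4, 5):
        for lanes in (4, 16):
            gbps = pcie_link_gbps(gen, lanes)
            print(f"PCIe {gen}.0 x{lanes}: {gbps:.1f} Gb/s, about {gbps / 8:.1f} GB/s per direction")
```

Each generation doubles the per-lane rate, so a PCIe 5.0 x16 link carries roughly twice the traffic of a PCIe 4.0 x16 link through the same connector footprint.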

Increasing data rates also complicate front-panel design. Here again, a new generation of interconnect systems plays a key role. These cable and connector assemblies can deliver 400 Gb/sec per port with strong signal integrity in a widely accepted, high-density pluggable form factor, minimizing tray and panel space while maximizing performance.

Cabling

Thus far we have focused on the issues within a given rack unit. Modularization means that in many cases, functions that were once housed within a single server are now spread across multiple boxes, creating a greater need for cabling to connect subsystems. Deciding which type of cable to use requires balancing individual data center needs with cost and energy considerations.

Passive cables, including direct attach copper cables (DACs), have been a standard in rack architecture for decades. At 56 Gb/sec PAM4, DACs can connect the top-of-rack (TOR) switch to every server in the rack within a reach of roughly 2.0 m to 3.0 m without excessive signal loss. For data centers operating at these lower data rates, DACs are a good option that saves on energy and cable costs.

As data centers move up to 112 Gb/sec PAM4, DACs longer than 2.0 m can suffer unacceptable losses. They may be unable to carry a clean signal far enough to connect the TOR switch with servers located lower in the rack, leading data center managers to consider alternatives.

Active electrical cables (AECs) provide a middle-ground option, effectively spanning lengths of up to 7.0 m for plug-and-play upgrades. AECs include re-timers at each end that clean and recondition the signal, amplifying it and removing noise as it enters the cable and again as it exits, providing fast transmission with near-zero data loss along the way. As a result, data centers are installing AECs where DACs fall short.

Though AECs do consume power, their small diameter helps improve front-to-back airflow through servers, an important benefit for thermal management. It also makes cable bundles easier and faster to install.

For high-performance computing and longer reach, active optical cable (AOC) is hard to beat. AOCs perform electrical-to-optical conversion at the cable ends, improving speed and distance performance while remaining compatible with common electrical interfaces.

With a transmission reach of 100 m to 300 m, AOCs can link switches, servers, and storage in different racks within the data center, or even connect one data center to another. DACs may still connect switches, servers, and storage within racks.

AOC is also light: it weighs about a quarter as much as a copper DAC and has half the bulk, which helps it dissipate heat better. And because its fiber carries light rather than electric current, it is immune to electromagnetic interference.

Of course, AOC is not a universal, one-size-fits-all cable technology. Different cable types can coexist in the same facility, giving data centers the flexibility to adapt as technology change accelerates.
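The cabling trade-offs above reduce, to a first approximation, to a reach check. The sketch below encodes the article's rule-of-thumb distances (DAC up to 3.0 m at 56 Gb/sec PAM4 and 2.0 m at 112 Gb/sec, AEC up to 7.0 m, AOC from 100 m) as assumed thresholds. Actual reach depends on wire gauge, fiber type, and the specific product, and a real selection would also weigh power and cost; the function names are hypothetical.

```python
def reach_limits_m(lane_rate_gbps: float) -> dict[str, float]:
    """Rule-of-thumb maximum reach per cable type (assumed, from the article's figures)."""
    dac_reach = 3.0 if lane_rate_gbps <= 56 else 2.0  # DAC reach shrinks at 112 Gb/s PAM4
    return {
        "DAC": dac_reach,  # passive copper: lowest power and cost
        "AEC": 7.0,        # active copper with re-timers at each end
        "AOC": 100.0,      # conservative end of the 100 m to 300 m range
    }


def candidate_cables(lane_rate_gbps: float, length_m: float) -> list[str]:
    """Cable types whose reach covers length_m, listed from simplest to most capable."""
    return [name for name, reach in reach_limits_m(lane_rate_gbps).items() if length_m <= reach]


if __name__ == "__main__":
    for rate, length in [(56, 2.5), (112, 2.5), (112, 5.0), (112, 30.0)]:
        print(f"{rate} Gb/s over {length} m: {candidate_cables(rate, length) or 'none'}")
```

Listing options from simplest to most capable mirrors the article's point that DACs save energy and cost wherever they still reach, with AECs and then AOCs taking over as distance and data rate grow.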

Thermal management

The increases in hardware density, data throughput, and cabling complexity are all contributing to difficulties in thermal management. Several cooling techniques have been used over the past decades, ranging from advanced heat sinks to full immersion liquid cooling. Each system has its advantages and challenges.

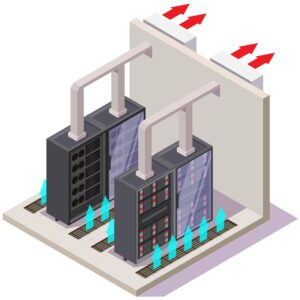

Fans are the oldest and simplest cooling method, sitting in the back of an equipment enclosure and pushing or pulling air through and away from it (Figure 4). Unfortunately, as engineers pack ever more computing functions into chips, these chips require larger and larger heat sinks. Given the space constraints of the data center, cooling with fans alone is becoming an increasingly untenable option.
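Why fans struggle as density rises follows from a basic energy balance: the airflow needed to remove heat grows linearly with the heat load for a fixed inlet-to-exhaust temperature rise. The sketch below uses approximate properties of air; the rack loads and the 10 K rise are hypothetical, and it ignores pressure drop, recirculation, and fan curves.

```python
AIR_DENSITY_KG_M3 = 1.2     # approximate, near sea level at room temperature
AIR_CP_J_PER_KG_K = 1005.0  # specific heat of air at constant pressure
CFM_PER_M3S = 2118.88       # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(heat_w: float, delta_t_k: float) -> float:
    """Airflow needed to remove heat_w with an inlet-to-exhaust rise of delta_t_k.

    Energy balance: heat = density * cp * volumetric_flow * delta_t.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    flow_m3s = heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)
    return flow_m3s * CFM_PER_M3S


if __name__ == "__main__":
    for rack_kw in (5, 10, 20):  # hypothetical rack loads
        cfm = required_airflow_cfm(rack_kw * 1000, delta_t_k=10.0)
        print(f"{rack_kw} kW rack, 10 K rise: about {cfm:.0f} CFM")
```

Doubling the rack's heat load doubles the air that fans must move, which is hard to deliver when larger heat sinks already crowd the enclosure.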

A liquid cooling system may replace fans or work alongside them. In this approach, coolant is piped to metal cold plates that sit on the hottest components in place of fan-cooled heat sinks. As the liquid flows through the cold plates, it absorbs heat and carries it to a heat exchanger, which transfers the heat out of the system before the cooled liquid repeats its circuit.

Cold-plate liquid cooling is more efficient than fans, but it is complicated to install and difficult to maintain.
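One reason liquid cooling outperforms fans is volumetric heat capacity: a litre of water absorbs far more heat per degree than a litre of air. A simple energy balance (heat = density x specific heat x volume flow x temperature rise) shows the gap. The fluid properties are approximate textbook values, and the 10 kW load and 10 K temperature rise are hypothetical.

```python
from typing import NamedTuple


class Coolant(NamedTuple):
    name: str
    density_kg_m3: float
    cp_j_per_kg_k: float


WATER = Coolant("water", 997.0, 4186.0)  # approximate values near room temperature
AIR = Coolant("air", 1.2, 1005.0)


def volumetric_flow_m3s(heat_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Coolant volume flow needed to absorb heat_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (coolant.density_kg_m3 * coolant.cp_j_per_kg_k * delta_t_k)


if __name__ == "__main__":
    heat_w, rise_k = 10_000.0, 10.0  # hypothetical 10 kW load and 10 K coolant rise
    for coolant in (WATER, AIR):
        litres_per_min = volumetric_flow_m3s(heat_w, rise_k, coolant) * 60_000  # m^3/s to L/min
        print(f"{coolant.name}: {litres_per_min:,.1f} L/min")
    ratio = volumetric_flow_m3s(heat_w, rise_k, AIR) / volumetric_flow_m3s(heat_w, rise_k, WATER)
    print(f"air needs roughly {ratio:,.0f}x the volume flow of water")
```

Under these assumptions, a modest water flow does the work of thousands of times more air, which is the efficiency advantage the cold-plate approach trades against its installation and maintenance burden.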

In immersion cooling, an entire device or machine is submerged into a bath of non-conductive, cooled liquid, which transfers heat away from it without requiring fans. Immersion is by far the most effective method of carrying heat away from equipment. Because it is so efficient, it can lower the data center’s carbon footprint by an estimated 15% to 30%. In the current environment of high energy costs and ambitious sustainability goals, immersion cooling is gaining popularity. Managing it is, however, extremely complex, and this has limited the scope of its adoption.

Bring the four keys into harmony

While we have discussed space constraints, signal integrity, cabling, and thermal management as largely separate topics, these four keys to data center optimization are of course tightly interrelated. For example, tight space constraints and increased cabling both have the potential to restrict airflow, which makes thermal management more challenging.

Thus, these interdependent factors increasingly need careful consideration before a single rack is placed. For example, thermal simulations that account for all heat sources and airflow pathways can head off problems during deployment.
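Before running a full thermal simulation, a first-pass heat budget can catch obvious problems. The sketch below sums the heat sources in one rack, including power drawn by active cables, and checks the total against a cooling capacity with a safety margin. It is a budgeting aid under stated assumptions, not a substitute for airflow simulation; the class, functions, wattages, and 20% margin are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeatSource:
    name: str
    watts_each: float
    count: int = 1


def total_heat_w(sources: list[HeatSource]) -> float:
    """Sum the heat dissipated by every source in the rack."""
    return sum(s.watts_each * s.count for s in sources)


def fits_cooling(sources: list[HeatSource], capacity_w: float, margin: float = 0.2) -> bool:
    """True if total heat stays below cooling capacity after reserving a safety margin."""
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    return total_heat_w(sources) <= capacity_w * (1 - margin)


if __name__ == "__main__":
    # Hypothetical rack inventory; active cables (AECs) add heat too.
    rack = [
        HeatSource("server", 500.0, count=16),
        HeatSource("TOR switch", 600.0),
        HeatSource("AEC", 10.0, count=32),
    ]
    print(f"total load: {total_heat_w(rack):.0f} W")
    print(f"fits 12 kW cooling with 20% margin: {fits_cooling(rack, 12_000.0)}")
```

A check like this only flags gross overloads; where heat actually concentrates, and whether cable bundles block airflow, still calls for the simulation the article recommends.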

Liz Hardin, Group Product Manager for the Datacom & Specialty Solutions Division at Molex, works with a broad range of customers to help creatively solve connection challenges. Recognizing that ever-higher signaling speeds and rising costs to deploy new systems pose growing challenges, Liz believes that working together as an ecosystem to find the best connectivity path forward is essential. A 17-year veteran of the interconnect industry, Liz has a strong customer focus and is excited to help bridge the gap between today’s system needs and the opportunities that next-generation requirements promise.