Passive and active direct liquid cooling for modules, along with chassis immersion implementations, is becoming more common for cooling high-wattage devices such as graphics processing units (GPUs) and switch application-specific integrated circuits (ASICs).

This seems to be driving an expanding, higher-volume market for fluid connectors and cables, as applications now include hyperscaler and HPC data-center systems, prosumer PCs, and engineering workstations.

However, for enterprise data-center systems, adoption of liquid cooling is moderate, though it still appears on some roadmaps. For example, Intel’s recently announced $700 million lab facility in Hillsboro, Oregon, is focused on developing new cooling systems. That work will likely require industry-specified and standardized fluid connectors and cables, which might be developed through the Open Compute Project Foundation (OCP).

Whether internal, inside-the-module, liquid connectors and cables will become standardized or stay application-specific with custom designs remains in question. This is true for both the data-center and prosumer market segments.

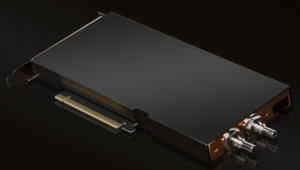

A certain number of external standard liquid connectors and cables will likely evolve in the current growth market, though this will depend on how many different form factors are used. For example, Nvidia’s latest A100 product is a new PCIe form-factor, add-in GPU module for data-center applications with liquid in-port and out-port connectors on the rear plate.

Another new product is ILLEGEAR’s external water-cooling module, FLOW, which has a new self-locking, quick-release external connector and cable assembly. It supports a temperature decrease of 15 to 20 °C on the CPU and GPU chips inside laptops.

FLOW uses a unique two-port fluid connector/hose system that’s quite different from other liquid-cooled products.

Here’s a look at the description and image:

Internal liquid-cooling cables often have larger diameters and larger connectors. Here’s a look at Nvidia’s GPU accelerator, which shows white liquid hoses alongside black copper I/O cables in the first image. They’re routed together in free air in both images.

Data-center external quick-disconnect liquid cooling connectors are typically marked with blue for the cool input port and orange or red for the warm output port, much like the Supermicro example below.

Several new high-end copper and optical connectors and cables will need to be vetted for data-center reliability and performance, especially while fully immersed in inert coolant liquids. This could potentially affect some form factors such as EDSFF, PECL, Ruler, M.2, M.3, and others.

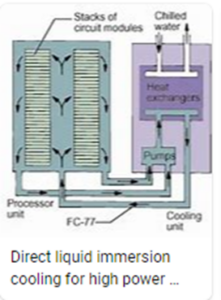

Here are a couple of liquid immersion cooling examples for high-power devices.

This is what full immersion can look like.

Observations

Aside from solving many heating and cooling problems, liquid-cooling infrastructures significantly reduce the need for high-cost chillers and related water use (and cost). They also eliminate the necessity of costly and noisy fans.

What’s more, liquid cooling saves at least 30% in electrical costs compared to air-cooled products. It can also save up to 50% of the slot space needed per high-wattage module: a liquid-cooled GPU now occupies only one PCIe slot instead of the two that older GPU products required.
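To make those two figures concrete, here is a minimal back-of-the-envelope sketch in Python. It treats the 30% electrical saving and the 50% slot saving quoted above as fixed point values, although the text gives them as "at least" and "up to" bounds. The function name, the chassis size, and the annual electricity cost are hypothetical placeholders chosen for illustration, not measured data.

```python
import math


def estimate_liquid_cooling(annual_cost_air, modules, slots_per_module_air=2,
                            electrical_saving=0.30, slot_saving=0.50):
    """Rough comparison of air-cooled vs. liquid-cooled figures.

    electrical_saving and slot_saving default to the 30% and 50% figures
    quoted in the text; every other input is a hypothetical placeholder.
    """
    if not 0.0 <= electrical_saving <= 1.0 or not 0.0 <= slot_saving <= 1.0:
        raise ValueError("savings must be fractions between 0 and 1")

    slots_air = modules * slots_per_module_air
    # Round up: hardware cannot occupy a fraction of a PCIe slot.
    slots_liquid = math.ceil(slots_air * (1.0 - slot_saving))

    return {
        "annual_cost_air": annual_cost_air,
        "annual_cost_liquid": annual_cost_air * (1.0 - electrical_saving),
        "slots_air": slots_air,
        "slots_liquid": slots_liquid,
    }


if __name__ == "__main__":
    # Hypothetical chassis: 8 dual-slot GPU modules, $100,000/year electricity.
    estimate = estimate_liquid_cooling(100_000.0, modules=8)
    for name, value in estimate.items():
        print(f"{name}: {value}")
```

Passing a smaller `slot_saving` or a larger `electrical_saving` shows how sensitive the comparison is to those two assumptions, which is worth checking before applying the quoted percentages to a real deployment.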

Will we see many new application-specific liquid connectors as this market develops over the next year or two? Will there be newer applications? There seems to be a high level of development going on here. Older types of liquid cooling interconnects were often described as hoses and fittings rather than liquid connectors and cables. Maybe we’ll be learning some new acronyms soon!

The top connector and cable suppliers are increasing their product offerings to include more sensor, space, and liquid cooling interconnects. It might take several companies, or a few larger ones, to standardize these higher-volume developments.

Internal custom copper piping, both semi-flexible and solid, is still used in low volume. But HPC systems with large, high-wattage CPU chips and newer IPU types are on the rise. These sometimes require copper fittings and welded connections, which do get costly when used in volume.

It seems that a distributed, as-needed liquid cooling topology and infrastructure that recycles small, targeted amounts of coolant provides the most favorable costs and the fastest install and servicing turnaround times.
