The Ethernet Alliance’s Technical Exploration Forum (TEF) was a very successful online video conference with excellent question-and-answer sessions. It was a rich 10-hour meetup, with more than 150 pages of material and perspectives from different industry leaders.

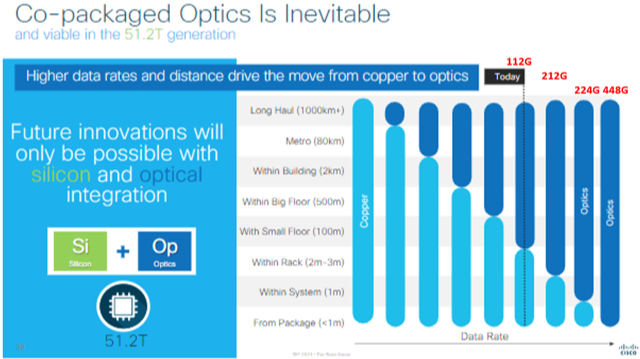

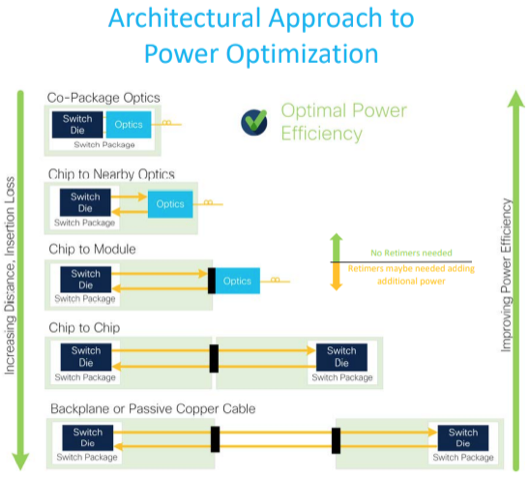

A key consensus takeaway: because SerDes power and cooling demands currently exceed practical limits, Co-Packaged Optical (CoPO) interconnects will likely be needed at 224G and 448G per-lane signaling rates for links from inside the box to the bulkhead I/O ports. Many technology and product developers are co-developing copper and optical solutions for inside-the-box 212G and 224G links. Several very large companies seem to be focused primarily on optical interconnects alone.

Key trends

Many installed datacenters and campus networks have exhausted their locale’s capacity and cannot obtain any more power or water, let alone the much larger amounts now needed. The challenge is very high server volume versus what the power grid can feasibly deliver. There has been a trend toward Regional Datacenter network topologies and fabrics that employ switched radix topologies. These topologies drive the need for many more Coherent interconnect links, because every switch is connected to every other regional switch. The new target switch radix for these fabrics is 128 links.
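To see why connecting every switch to every other switch multiplies link counts so quickly, here is a minimal Python sketch. It assumes exactly one link per switch pair and that all ports of a radix-128 switch are spent on the mesh; both are simplifying assumptions of mine, not figures from the forum, and the function names are hypothetical.

```python
def full_mesh_links(num_switches: int) -> int:
    """Links needed so that every switch connects directly to every other one.

    Assumes one link per switch pair (an illustrative simplification).
    """
    if num_switches < 0:
        raise ValueError("num_switches must be non-negative")
    return num_switches * (num_switches - 1) // 2


def max_full_mesh_switches(radix: int) -> int:
    """Largest full mesh if every port on a switch goes to a distinct peer.

    Assumes no ports are reserved for servers or uplinks.
    """
    return radix + 1


if __name__ == "__main__":
    for n in (8, 32, 128):
        print(f"{n} switches -> {full_mesh_links(n)} links")
    print("Radix 128 supports a full mesh of up to",
          max_full_mesh_switches(128), "switches")
```

The link count grows roughly with the square of the switch count, which is the reason a fully connected regional fabric needs so many more interconnects than a tiered design.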

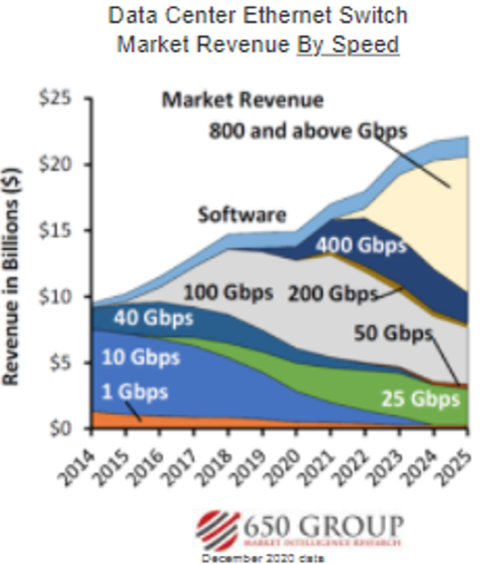

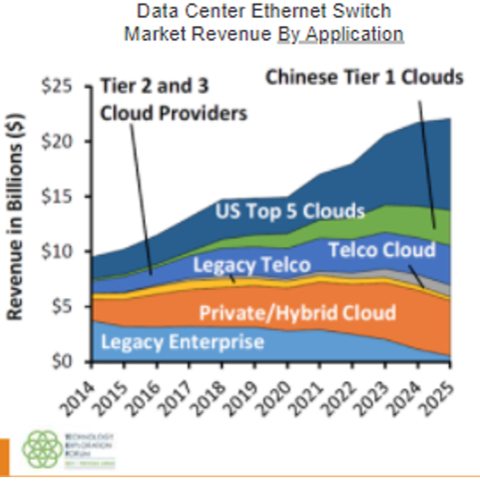

The Enterprise and HPC market segments drove the Ethernet 100G link PHY specification and its implementation. Carriers drove the 400G spec, and Hyperscalers are now driving the new 800G and 1.6T PHY specs for their rollouts of Mobile AV, 5G, IIoT, Cloud Systems, and other new market segment applications. The 650 Group forecast chart shows market revenue by application, with high-growth shipments of 800G and 1.6T products compared against the established 100G and 400G pluggable types.

Cloud equipment developers are driving the new DC interconnect products. Forecasts indicate that 10G, 40G, 50G, and 200G port usage will shrink fast over the next few years, while 100G and 400G usage will be sustained. Growth comes from 800G and 1.6T interconnects and ports, starting in 2022.

Some of the forecasting is positive: cloud market drivers include AI, XR, VR, AR, and video conferencing with newer meeting platforms such as Zoom. Enterprise consumption of 25G pluggable interconnects is forecast to remain healthy, per 650 Group.

Some early decisions need to be made. Which modulation would be best: PAM5, PAM6, PAM8, or 224G Dual Duplex over a single lane? Should the industry develop the 800G, 1.6T, and 3.2T link specifications concurrently? We learned from presenter Cathy Liu of Broadcom that 224G PAM6 is achievable, though likely with somewhat higher power consumption.
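As a rough way to compare the modulation options, the sketch below computes the ideal bits per symbol (log2 of the number of levels) and the symbol rate that would result on a 224 Gb/s lane. It is a back-of-the-envelope illustration only: it ignores FEC and coding overhead, practical mappings may carry fewer bits than this ideal bound, and PAM4 is included purely as a reference point because it is what today's 106/112G lanes use.

```python
import math

LANE_RATE_GBPS = 224.0  # per-lane bit rate under discussion


def bits_per_symbol(levels: int) -> float:
    """Ideal information carried by one PAM symbol with the given level count."""
    return math.log2(levels)


def symbol_rate_gbaud(lane_rate_gbps: float, levels: int) -> float:
    """Symbol rate needed to carry lane_rate_gbps, ignoring all overhead."""
    return lane_rate_gbps / bits_per_symbol(levels)


if __name__ == "__main__":
    for levels in (4, 5, 6, 8):
        print(f"PAM{levels}: {bits_per_symbol(levels):.2f} bits/symbol, "
              f"{symbol_rate_gbaud(LANE_RATE_GBPS, levels):.1f} GBd")
```

More levels lower the symbol rate, which eases channel bandwidth demands, but the levels sit closer together, so the signal-to-noise requirement rises. That trade-off is what the modulation decision has to balance.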

Problems

It took more than 4 years to develop and release the IEEE 802.3ck 106G per-lane electrical spec. Will it take much longer than 5 years to create the next 212G spec? Or will one or two optical technologies achieve breakthrough performance in power, cost, size, and time to market? The comments added in blue font are what I learned at the recent forum.

The probable power consumption curve is the main problem, making it less likely that pluggable and copper interconnects will see much use for 224G per-lane links. However, 106/112G pluggable interconnect types are likely to keep growing in many new market segments and products. The red font markups below are from what I learned at this forum.

Some Hyperscaler companies are evaluating CoPO interconnects at the 112G per-lane rate to compete better on power consumption cost. See the Cisco chart.

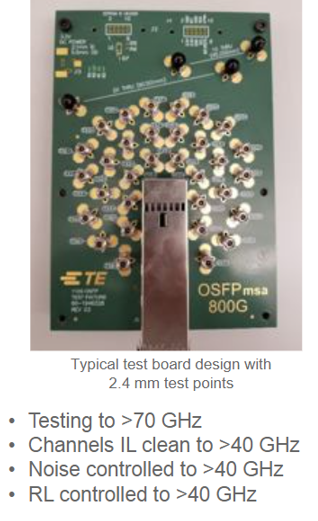

There is a serious signal integrity (SI) problem in measuring 224G signaling, and an open question of how long it will take to get it working well and completed. It will likely take longer than the last major wave of 400G development, which took 4 years, so perhaps 5-6 years for 224G? Test equipment manufacturers are hard pressed to maintain any worthwhile parameter margins with current technology. I heard that the 800G test chip may require a steady 3-picosecond engine. So it will take many more design experiments, methodologies, and fixture development, plus a much higher level of interdependence and feedback with their customers. Getting timely industry agreement on how to specify the new data may be the bigger challenge. Here is a photo of the complex OSFP MSA 800G test board by TE.

Solutions

New Coherent optical technology is being driven down toward shorter reaches, and direct-detection technology is being driven up toward longer ones. They are now competing in some newer topologies and reaches. What will this chart look like next year and after? See the Huawei chart.

Coherent transceiver technology has been optimized for both shorter and longer switch radix links. Hollow fiber is increasingly used for lower-latency optical connections by Fintech DC users. Product developers need to analyze the Cisco chart below to determine which topologies and devices they can best develop and bring to market in time, perhaps for the first wave.

Some observations

It appears that active copper retimer chips will be needed to move 212G per-lane signals across even a small PCB, and maybe another chip embedded in an Active Copper DAC QSFP plug cable assembly. Leading-edge DC developers increasingly see this added chip power consumption and component cost as unacceptable. Thus Transceiver demand is moving toward Silicon Photonics, for which the market forecast is a CAGR of 46%. See the Yole Développement chart.
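To put a 46% CAGR in perspective, the short sketch below computes how many times larger a market becomes after a given number of years of compound growth. The year counts are arbitrary illustrations of mine; the forecast horizon and base value are not stated here, so no dollar figures are implied.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Factor by which a value grows after `years` at a compound annual rate."""
    return (1.0 + cagr) ** years


if __name__ == "__main__":
    CAGR = 0.46  # the 46% rate cited above
    for years in (1, 3, 5):  # illustrative horizons, not from the forecast
        print(f"After {years} year(s): {growth_multiple(CAGR, years):.2f}x")
```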

I recommend visiting the Ethernet Alliance website and downloading the various TEF presentations for a more complete and detailed review.

It seems networks will be collapsed again, with the trend away from ToR switches and 4-5 hop topologies. That means less need for passive or active copper DACs going forward. With the increased use of CoPO interconnects, could reduced use of AOCs become a trend by 2022-2023? We could expect the rise of hybrid and fully optical circuit boards and midplanes. Will there be a lot of new glass or polymer waveguide boards, or woven fiber circuit boards?

It seems that adoption of Co-Packaged Optical modules will hasten the decline of the established Pluggable interconnects at 224G and beyond. Systems and standards developers are already planning to use Optical Chiplet devices, with internal optical ribbon cables connecting to the bulkhead. These cables use the newer and smaller CS, SN, and MDC connectors more than the older, larger LC and MPO types.
