By Ed Cady, contributing editor

Peripheral Component Interconnect Express, or PCIe, has become the standard interface for connecting high-speed components, such as graphics, memory, and storage.

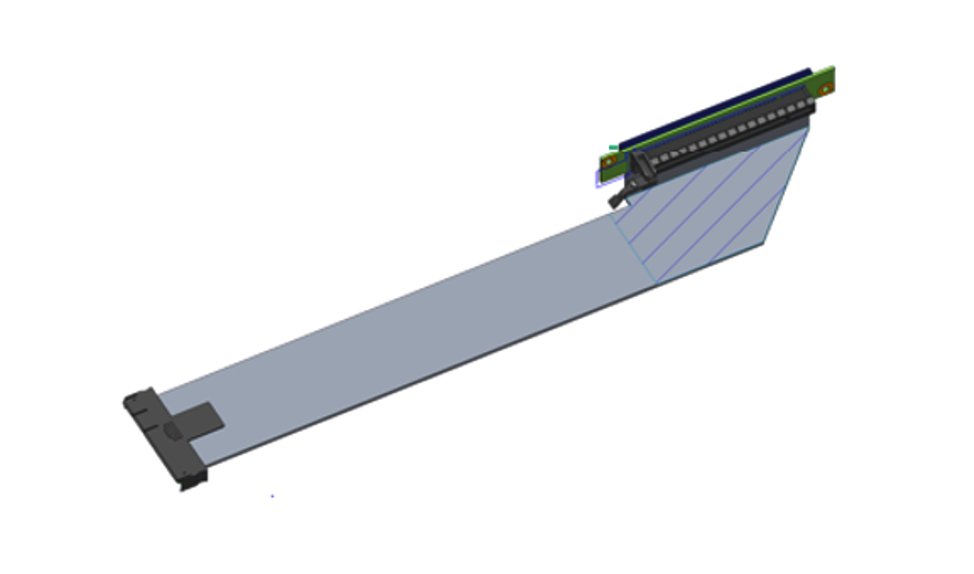

Typically, PCIe interface signaling and physical-layer implementations rely on printed circuit board assemblies (PCBAs) and edge connectors. Several standardized and non-standardized cabling solutions are also available to support a variety of applications and related topologies.

Older interface standards (such as ISA, EISA, PCI, or PCI-X) often made use of internal flat cables and edge connectors from previous generations.

Several of the early applications included internally cabled power distribution options, usually combined within the IO ribbon cable legs.

Next-generation PCIe CEM edge connectors that offer higher-speed performance are now available. Newer standards, such as CXL 1.0, also use the latest PCIe CEM connector. Internal cables for PCIe 6.0 and PCIe 7.0 applications are expected to hit the market by or before 2022.

The earliest PCIe 1.0 applications included production testbeds for motherboards, add-in boards, server chassis, and backplane extenders. Even then, wire termination was becoming increasingly important.

Features


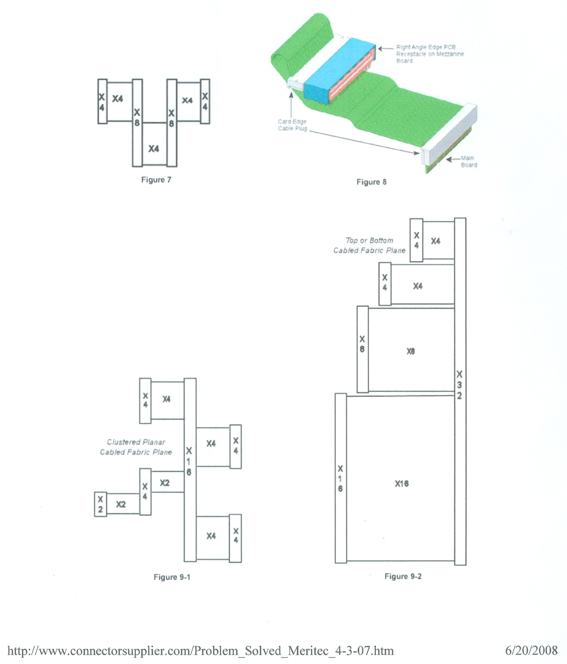

PCIe 2.0 applications included several embedded-computer planar cables for cPCI and ATCA interconnects in various form factors and topologies. Well-shielded designs typically also supported external flat and round full-bus twin-axial cable applications.

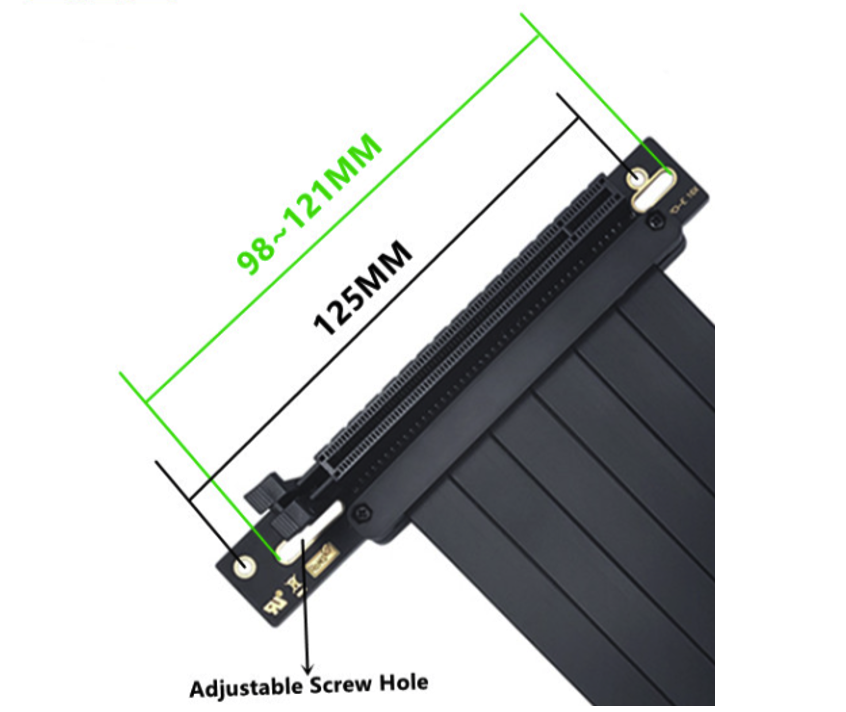

PCIe 3.0 internal-cabling solutions brought more choices and additional applications beyond embedded bus extenders and test adapters. These designs offered better crosstalk control and more interconnect options.

For example, internal flat, foil-shielded twin-axial cables enabled advanced application interconnects, usually inside server and storage boxes. Application-specific foldable solutions also became an option, and these extended into PCIe 4.0 inside-the-box applications.

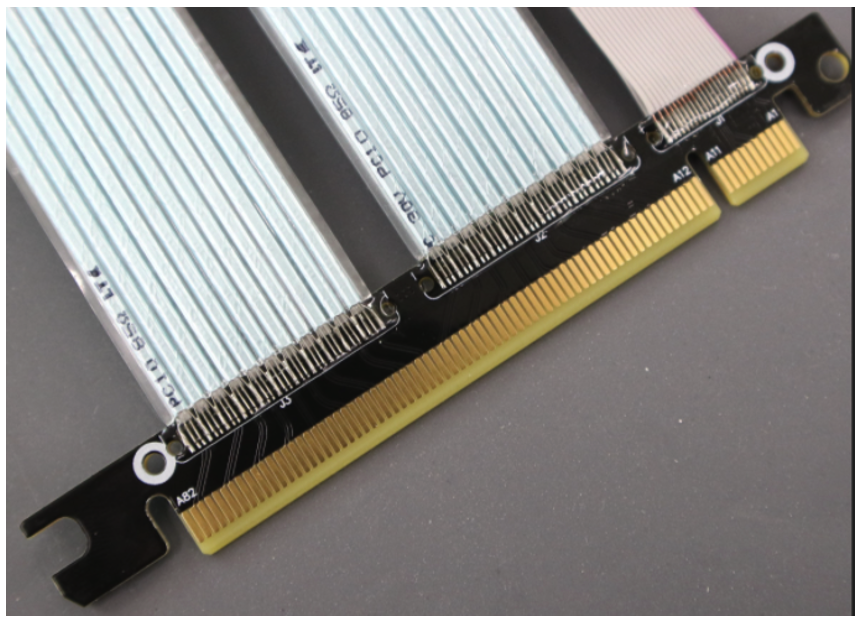

It’s worth noting that flat, foil-shielded cables are still used in many 16 GT/s, 25 Gbps, and 32 GT/s NRZ per-lane applications.

It did not take long to realize that most tightly bent, foil-shielded twin-axial cable assemblies failed to meet performance requirements, particularly at 56G PAM4, 112G PAM4, or higher per-lane rates. The reason was the link budget: each cable crease or fold consumed 0.5 dB or more.
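To see why folds add up quickly, the minimal sketch below subtracts a fixed loss per fold from the share of the channel loss budget allotted to an internal cable. Only the 0.5 dB per fold figure comes from the text above; the 3 dB allocation and the 1.8 dB straight-run loss are hypothetical placeholders, not values from any PCIe specification.

```python
# Minimal sketch: how fold losses consume a cable's insertion-loss budget.
# Only the 0.5 dB/fold figure is from the article; other numbers are hypothetical.

FOLD_LOSS_DB = 0.5  # per crease or fold ("0.5 dB or more" per the article)


def remaining_margin_db(budget_db: float, straight_loss_db: float, folds: int,
                        loss_per_fold_db: float = FOLD_LOSS_DB) -> float:
    """Return the loss budget left after the straight-run loss and all folds."""
    if folds < 0:
        raise ValueError("folds must be non-negative")
    return budget_db - straight_loss_db - folds * loss_per_fold_db


if __name__ == "__main__":
    # Hypothetical case: 3 dB allotted to the internal cable,
    # 1.8 dB already consumed by the straight (unfolded) run.
    for folds in range(5):
        margin = remaining_margin_db(3.0, 1.8, folds)
        status = "OK" if margin >= 0 else "over budget"
        print(f"{folds} folds: {margin:+.1f} dB margin ({status})")
```

With these assumed numbers, the assembly still has margin after two folds but goes over budget at the third; a real design would use the loss allocation from its own channel specification.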

Straight internal cable assemblies almost always performed better.

PCIe 4.0 cable assemblies facilitated better and faster automated optical inspection by in-line production test equipment. Manufacturers often used clear polymer materials, such as silicone, in these designs.

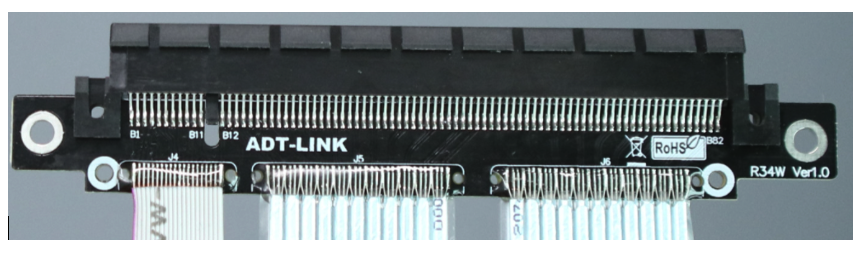

PCIe 5.0 x16 to GenZ 4C 1.1 connector adapter cable assemblies have advanced to offer several power-delivery options and are compatible with PCIe CEM r5.0 32 GT/s NRZ per-lane edge connectors.

The five RSVD (reserved) pins support the Flex Bus system, and 12 V and 48 V power options are specified for internal cables.
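Part of the appeal of a 48 V option in an internal cable is simple arithmetic: for the same load power, a higher supply voltage means lower current (I = P / V) and much lower resistive loss in the conductors (P_loss = I²R). The sketch below compares the two options; the 300 W load and 10 milliohm loop resistance are hypothetical example values.

```python
# Compare supply current and conductor I^2*R loss for 12 V and 48 V feeds.
# The load power and cable loop resistance are hypothetical example values.


def supply_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn by a load of power_w watts at voltage_v volts (I = P / V)."""
    return power_w / voltage_v


def conductor_loss_w(power_w: float, voltage_v: float, loop_resistance_ohm: float) -> float:
    """Resistive loss in the cable conductors (P_loss = I^2 * R)."""
    current = supply_current_a(power_w, voltage_v)
    return current ** 2 * loop_resistance_ohm


if __name__ == "__main__":
    load_w = 300.0       # hypothetical add-in card load
    loop_r_ohm = 0.010   # hypothetical round-trip cable resistance (10 milliohms)
    for volts in (12.0, 48.0):
        amps = supply_current_a(load_w, volts)
        loss = conductor_loss_w(load_w, volts, loop_r_ohm)
        print(f"{volts:.0f} V: {amps:.2f} A, {loss:.3f} W lost in the cable")
```

Because loss scales with the square of current, quadrupling the voltage from 12 V to 48 V cuts the current by 4x and the conductor loss by 16x for the same load and cable.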

The smaller form factor of the GenZ SFF TA 1002 connector is well suited to reliable operation at 56G PAM4 or 112G PAM4 per lane.

The PCIe 6.0 specification, at 64 GT/s PAM4 per lane, is nearly complete and scheduled for release in 2021. Many new internal cable assemblies and connector products are currently in development, including the PCIe 6.0 CEM x16 connector and several M.2 connector adapter cables and harnesses.
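The move to PAM4 is easiest to see with a little arithmetic. The sketch below derives the per-lane symbol rate and the raw x16 throughput from each generation's transfer rate, assuming one bit per transfer (so PAM4, at 2 bits per symbol, halves the symbol rate). It deliberately ignores line encoding, FLIT/FEC, and protocol overhead, so real payload throughput is lower.

```python
# Raw per-direction PCIe throughput and symbol rate from the per-lane transfer rate.
# Ignores 128b/130b encoding, FLIT/FEC and protocol overhead: raw figures only.

from dataclasses import dataclass


@dataclass(frozen=True)
class Gen:
    name: str
    gt_per_s: float       # billions of transfers per second per lane (1 bit each)
    bits_per_symbol: int  # 1 for NRZ, 2 for PAM4


GENERATIONS = [
    Gen("PCIe 5.0", 32.0, 1),   # NRZ
    Gen("PCIe 6.0", 64.0, 2),   # PAM4
    Gen("PCIe 7.0", 128.0, 2),  # PAM4
]


def symbol_rate_gbaud(gen: Gen) -> float:
    """Symbols per second per lane, in GBd; PAM4 carries 2 bits per symbol."""
    return gen.gt_per_s / gen.bits_per_symbol


def raw_gbytes_per_s(gen: Gen, lanes: int) -> float:
    """Raw GB/s in one direction across `lanes` lanes (8 bits per byte)."""
    return gen.gt_per_s * lanes / 8


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"{gen.name}: {symbol_rate_gbaud(gen):.0f} GBd/lane, "
              f"x16 raw ~{raw_gbytes_per_s(gen, 16):.0f} GB/s per direction")
```

The output shows PCIe 6.0 doubling the raw x16 rate of PCIe 5.0 (128 vs. 64 GB/s per direction) while keeping the same 32 GBd symbol rate, which is the point of switching from NRZ to PAM4.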

It will be interesting to see whether the SFF TA 1002 x32 connector, or another type, becomes the PCIe 7.0 CEM connector and the basis for the next PCIe internal cable design standard.

Today’s advances require even smaller form-factor packaging with tighter routing requirements inside the box. The cables and connectors must also handle high-temperature interiors without damage or reduced performance. High speed is also a must.

In fact, several internal high-speed IO cable assemblies are now designed to support two generations of data rates, such as 53G and 106G, 56G and 112G, or 128G and 224G.

Demand for 8-, 16-, and 32-lane link options is also expected to increase, especially for internal pluggable cables with an SFF TA 1002 connector on one end.

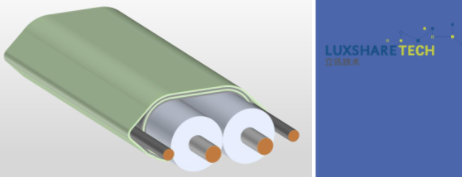

New flat Twinax raw cable is meeting these high-performance expectations. It supports PCIe 6.0 at 64 GT/s and potentially PCIe 7.0 at 128 GT/s, as well as external 56G/112G per-lane DAC applications.

Luxshare Tech’s new Optimax Twinax cable has delivered accurate, stable signal integrity (SI) performance both when folded and in active-bending applications. Its test results exceed the requirements of many corporate testing regimes.

Its simulation models track physical measurements of real-world performance extremely closely across several testing regimes. This is a notable accomplishment, as the industry has struggled with faulty 100G signal modeling, simulation reliability, and real-world performance.
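The article does not say which metric was used to judge how closely models match measurements. One simple, common approach is to compare simulated and measured insertion loss point by point at the same frequencies and report the worst-case and RMS deviation, as in the sketch below. The sample curves are invented placeholders, not Luxshare data.

```python
# Quantify how closely a simulated insertion-loss curve tracks a measured one.
# Both curves must be sampled at the same frequency points.
# The sample values below are invented placeholders.

import math


def correlation_error_db(simulated_db: list[float], measured_db: list[float]) -> tuple[float, float]:
    """Return (max absolute deviation, RMS deviation) in dB between two curves."""
    if len(simulated_db) != len(measured_db) or not simulated_db:
        raise ValueError("curves must be non-empty and the same length")
    diffs = [s - m for s, m in zip(simulated_db, measured_db)]
    max_dev = max(abs(d) for d in diffs)
    rms_dev = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return max_dev, rms_dev


if __name__ == "__main__":
    # Hypothetical S21 values (dB) at five shared frequency points.
    simulated = [-1.0, -2.1, -3.0, -4.2, -5.1]
    measured = [-1.1, -2.0, -3.2, -4.3, -5.0]
    max_dev, rms_dev = correlation_error_db(simulated, measured)
    print(f"max deviation {max_dev:.2f} dB, RMS deviation {rms_dev:.2f} dB")
```

The worst-case figure catches a single resonance or suck-out the model misses, while the RMS figure summarizes agreement over the whole sweep; reporting both avoids one hiding problems in the other.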

Such raw cable performance allows tighter routing for inside-the-box cable assemblies and smaller form factors, which are ideal for PECL, EDSFF, OCP NIC, Ruler, and others.

A few features that seem to make a difference in cable performance include:

- The choice of conductor and dielectric insulation materials

- More symmetric designs

- Stringently controlled tolerances

- Better process control

- Full in-line SI testing

- Automated optical inspection and histograms

- Multiple testing regimes per application set (using Telcordia, TIA/EIA, ISO, and Tier 1 user labs)

The Optimax Twinax family offers:

1. A bend radius down to 2x the cable OD with minimal SI degradation

2. Twinax from 33 to 24 AWG, with 26, 30, and 40 GHz bandwidth options for 112G+

3. Support for 16, 25, and 32 Gb/s NRZ, as well as 56, 62, and 112 Gb/s PAM4

4. Impedance options of 85, 90, 92, 95, 100, and 104 Ohms

5. Several drain-wire and pair counts, in single-pair or laminated types

6. Raw cable types with various temperature ratings, such as 85 or 105 degrees Celsius
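Two quick calculations help put the list above in context: the Nyquist frequency (half the symbol rate) for some of the listed data rates, and the reflection coefficient at the step when a given cable impedance meets the channel it plugs into. The rates and impedances come from the list; the 85 Ohm host-channel impedance is an assumption for illustration.

```python
# Two quick checks on the Optimax list: Nyquist frequency per data rate, and the
# reflection at an impedance step. The 85-ohm reference channel is an assumption.


def nyquist_ghz(gbps: float, bits_per_symbol: int) -> float:
    """Nyquist frequency = symbol rate / 2 (NRZ: 1 bit/symbol, PAM4: 2)."""
    return gbps / bits_per_symbol / 2


def reflection_coefficient(z_load: float, z_ref: float) -> float:
    """Voltage reflection coefficient at an impedance step: (ZL - Z0) / (ZL + Z0)."""
    return (z_load - z_ref) / (z_load + z_ref)


if __name__ == "__main__":
    for rate, bps in [(32, 1), (56, 2), (112, 2)]:
        kind = "NRZ" if bps == 1 else "PAM4"
        print(f"{rate} Gb/s {kind}: Nyquist {nyquist_ghz(rate, bps):.0f} GHz")

    z_ref = 85.0  # assumed host-channel differential impedance
    for z in (85, 90, 92, 95, 100, 104):
        gamma = reflection_coefficient(z, z_ref)
        print(f"{z} Ohm cable on {z_ref:.0f} Ohm channel: gamma = {gamma:+.3f}")
```

At 112 Gb/s PAM4 the Nyquist frequency is 28 GHz, which gives a reference point for the 26, 30, and 40 GHz bandwidth figures quoted for 112G+. The reflection check shows why a range of impedance options matters: a 100 Ohm cable on an 85 Ohm channel reflects about 8% of the incident voltage at the transition, while a matched 85 Ohm cable ideally reflects none.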

Internal cable solutions for PCIe 7.0 at 128 GT/s PAM4 per lane are likely to include inside-the-box optical interconnect options, such as COBO on-board optics (OBO) or various co-packaged optics (CPO) types.

There’s a good chance that Optimax Twinax copper internal cable could support PCIe 7.0 at 128 GT/s and 128G PAM4 short-reach inside-the-box applications, including rack applications. But we’ll have to wait and see.

A few observations…

The use of higher-speed signaling and wider (16- and 32-lane IO PHY) interfaces will greatly increase power and control-circuit requirements. With its smaller footprint and support for 256-lane interfaces, the GenZ internal interconnect system will likely be an ideal option for hyperscale data center systems, as PCIe currently supports only 128 lanes.

Successful, higher-volume internal cable manufacturing will also require faster-ramping production lines, similar to the high-speed consumer cabling methods already largely in place. Rigorous quality control will also be necessary to deliver high-quality products.

Currently, the CXL accelerator link uses the latest PCIe CEM connector revisions, making it an internal connector and cabling application. GenZ has an agreement with the CXL Consortium for an external link interface for inter-rack topologies. But will CXL developers also use SFF TA 1002 connectors and cables, or other types, to achieve PCIe 7.0 128 GT/s per-lane performance?

It will be interesting to find out.
