Data-center passive DAC external cables have been standardized and interoperable for decades. However, the mating, inside-the-box cables have yet to be fully standardized.

In general, inside-the-box IO interface interconnections have been a competitive value point for those OEMs and suppliers that offer quality options. Providing reliable connectors and twin-axial cables configured in an ideal layout has been a lofty goal. Several internal cable applications rely on proprietary solutions; certain SAS storage box applications are the exception.

Market demands

Advancing data-center technology and market changes mean developers, MSAs, and standards groups are examining the new cable and connector features that will be required. Today’s market is already demanding connectors that can handle:

- Varying impedance ranges

- Higher heat lifecycles

- Long-life liquid circuit cooling

- Robotic IO module insertion/replacements

Connectors with these characteristics must also fit several form-factor packages inside rack boxes.

New IO interface convergence requirements, which integrate information technology with operational technology systems, mean that older cable and connector options no longer suffice in many situations. Updated interface products must include lower-profile, higher-speed inside-the-box cable options.

To find the ideal cables and connectors, there’s often a need to source from multiple suppliers and, therefore, to rely on inter-compatible IO assemblies. Multi-sourcing is long established for external connectors and cables, but it has not yet been a factor for internal ones.

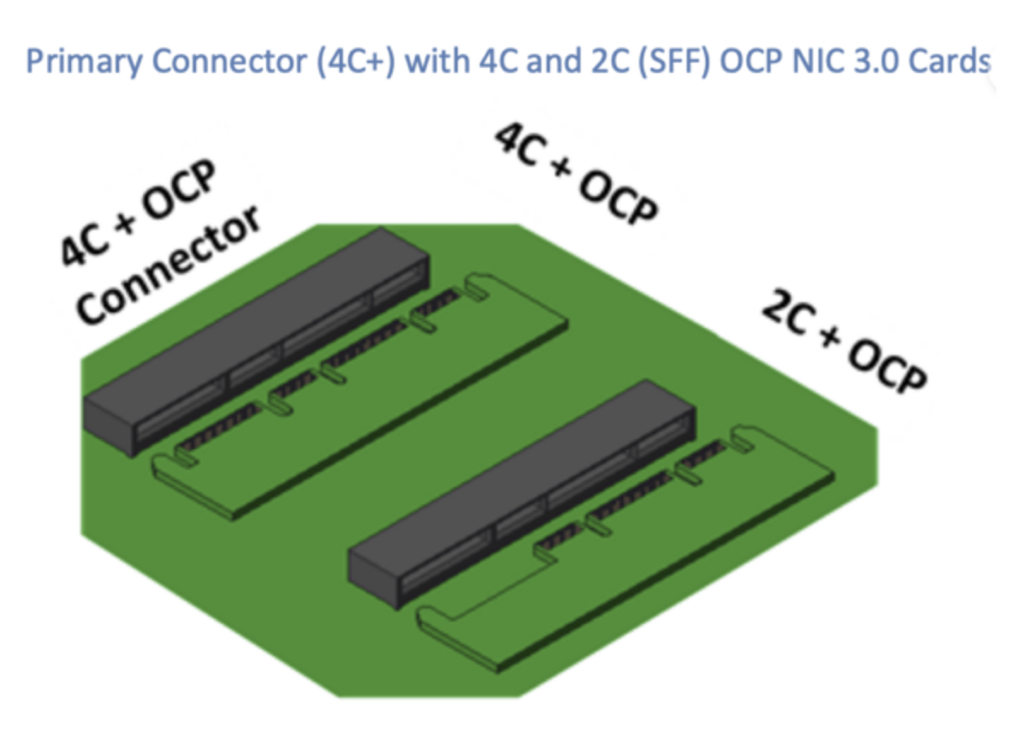

Currently, the OCP NIC 3.0 spec from the Open Compute Project (OCP), which shares data-center designs and best practices, uses the SFF TA 1002/1020 internal cables and connectors for module-to-board connections. They can handle 56G PAM4, 56G NRZ, 112G PAM4, and 124G PAM4 per-lane signaling.
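To make the per-lane figures a little more concrete, here is a minimal sketch of how a nominal bit rate relates to symbol rate for NRZ (two levels) and PAM4 (four levels). It uses three of the rates listed above as round, nominal numbers. The function name is illustrative only, and real links run at slightly different line rates once encoding and FEC overhead are included.

```python
import math

# Number of amplitude levels per symbol for each line code.
MODULATION_LEVELS = {"NRZ": 2, "PAM4": 4}


def symbol_rate_gbaud(bit_rate_gbps: float, levels: int) -> float:
    """Nominal symbol rate: bit rate divided by bits carried per symbol."""
    bits_per_symbol = math.log2(levels)
    return bit_rate_gbps / bits_per_symbol


# Nominal per-lane rates taken from the text (overhead ignored).
for rate, modulation in [(56, "PAM4"), (56, "NRZ"), (112, "PAM4")]:
    baud = symbol_rate_gbaud(rate, MODULATION_LEVELS[modulation])
    print(f"{rate}G {modulation}: about {baud:g} GBd per lane")
```

Under these assumptions, 56G NRZ and 112G PAM4 both signal at roughly the same symbol rate, which is one reason the same connector family can be considered for both.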

New proposals

There’s a new internal connector and cable proposal for the OCP Server Project, which provides standardized server system specifications for scale computing. Molex, an electronics and connectivity manufacturer, will present this proposal at the October OCP Global Summit 2022 meetings. Other suppliers might also get involved.

The result could be modifications to or all-new developments for the current components and assemblies. Any new developments might result in updated copper and internal optical cables or the use of liquid cooling.

It’s become clear and critical that external and internal copper cables work harmoniously.

This might require internal IO network port faceplate cables and connectors (usually DAC types). A few other options include:

- EDSFF SSD array connectors/cabling

- DDR5/6 connectors

- JEDEC45-DDIMM SFF TA 1002/1020

- Accelerator module connectors/cabling (like CXL using the CEM and SFF TA 1020)

- NVLink’s latest interconnects

This goal of ensuring external and internal components work better together might result in only one application or implementation for now, with more recommendations at a later date.

It’s also possible that any new standard server connector could be used inside a switch box if it meets the latest internal switch box requirements.

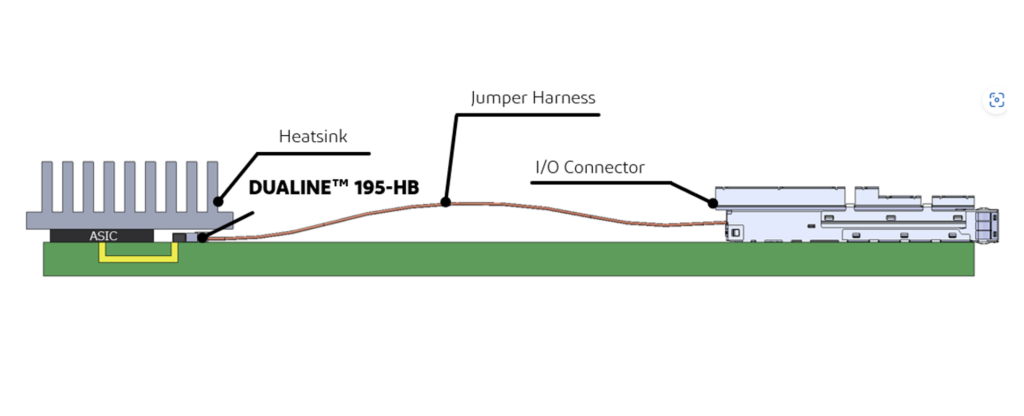

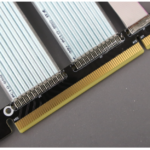

Let’s consider the current internal server faceplate-to-network-chip interconnect implementations. Below is an example of a single, older-generation pluggable receptacle connector and internal cable.

A server can have as few as one network port, while a switch can have as many as 36 ports with internal cables.

The internal cable links for Ethernet, InfiniBand, RoCE, FC, NVLink, and Slingshot interfaces mostly use QSFP, QSFP-DD, and OSFP receptacle connectors and cages on one end, with proprietary connectors on the other end of the internal cable.

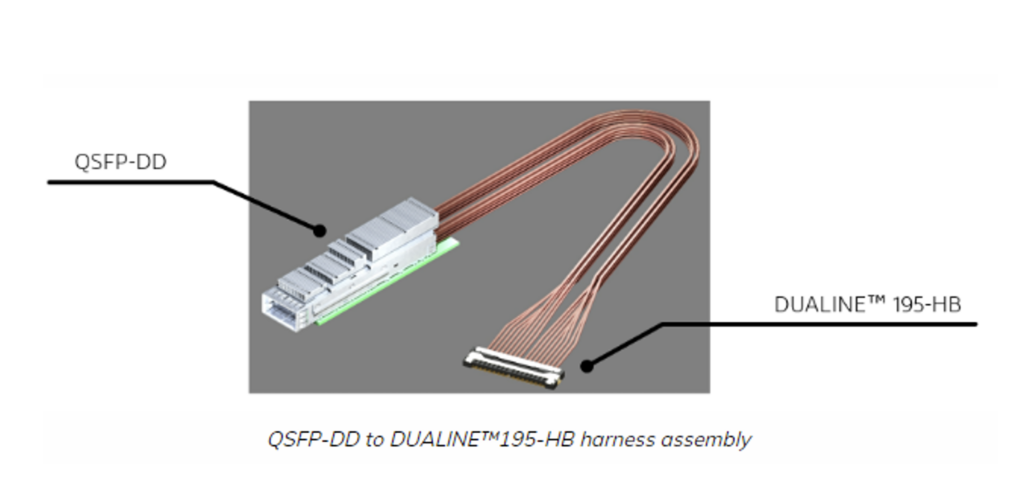

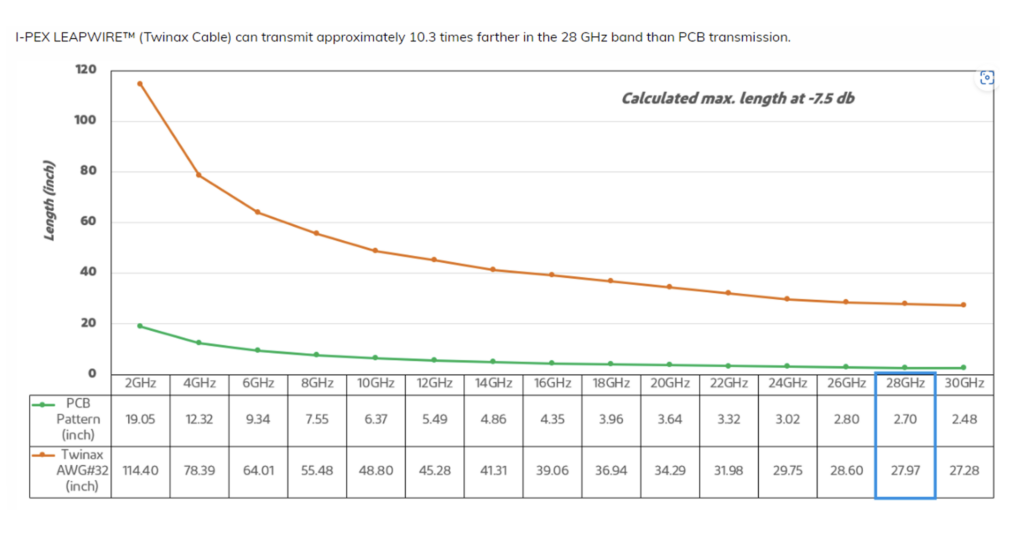

Products running at 100+G per-lane typically run signaling over an inside-the-box, twin-axial cable (instead of the PCB traces).

Pluggable styles

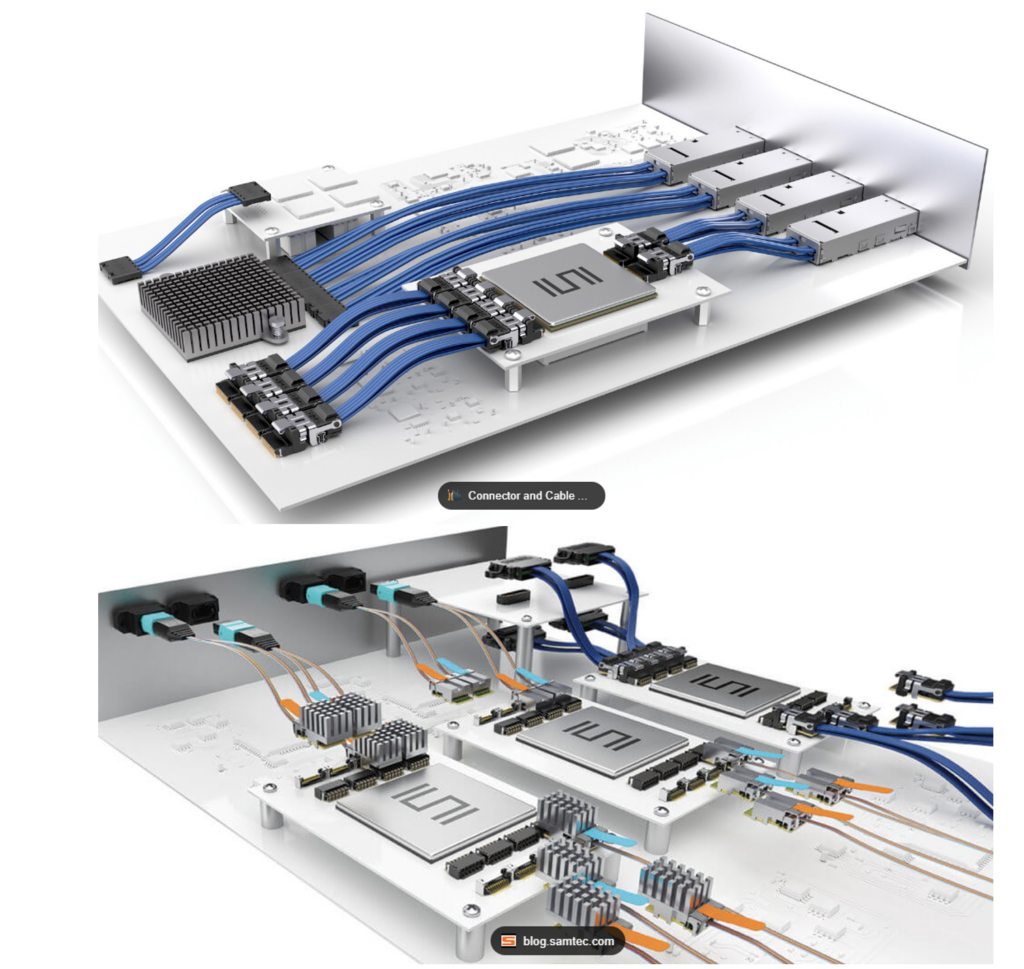

Several IO fabric links are using pluggable, front-faceplate-mounted internal receptacle cables and connectors with a cage and heatsinks. These internal pluggable receptacles/cages accept multi-sourced, standard external DAC, ADAC, and AOC plug connectors, which plug into them easily.

However, most pluggable cages, heatsinks, receptacles, raw twin-axial cable types, and other internal cable assembly connector types — such as low-profile, high-temperature, near-chip connectors — are not interchangeable or inter-compatible unless they’re privately labeled or licensed.

Typically, internal cables and connectors are tuned to 85, 92, or 100-ohm impedance. The value depends on the interface spec, since the cable assembly must match PCIe or whichever other interface it serves. Also, the older QSFP28 and newer QSFP112 receptacle connectors usually differ significantly in latency, thermal circuits, and airflow.
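To illustrate why the impedance tuning matters, here is a rough sketch using the standard transmission-line reflection formula, Γ = (Z_load − Z_source) / (Z_load + Z_source), and the corresponding return loss in dB. It assumes a single, ideal, frequency-independent impedance step between two parts of a channel, which is a simplification: real connectors and twin-axial cables have frequency-dependent behavior. The function names are illustrative only.

```python
import math


def reflection_coefficient(z_load: float, z_source: float) -> float:
    """Voltage reflection coefficient at an ideal impedance step."""
    return (z_load - z_source) / (z_load + z_source)


def return_loss_db(z_load: float, z_source: float) -> float:
    """Return loss in dB (higher is better); infinite for a perfect match."""
    gamma = abs(reflection_coefficient(z_load, z_source))
    if gamma == 0:
        return math.inf
    return -20 * math.log10(gamma)


# Compare the three nominal impedance targets mentioned in the text.
for z_source, z_load in [(85, 92), (85, 100), (92, 100)]:
    gamma = reflection_coefficient(z_load, z_source)
    rl = return_loss_db(z_load, z_source)
    print(f"{z_source} -> {z_load} ohm: gamma = {gamma:.3f}, "
          f"return loss = {rl:.1f} dB")
```

Even in this idealized view, mixing an 85-ohm part with a 100-ohm part reflects noticeably more signal than mixing 92-ohm and 100-ohm parts, which hints at why assemblies tuned for different interfaces are not freely interchangeable.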

It would be challenging to standardize at the component and assembly level with the 100+G pluggable receptacles. Granted, it could be done with tight specifications and fine-tuned designs and products.

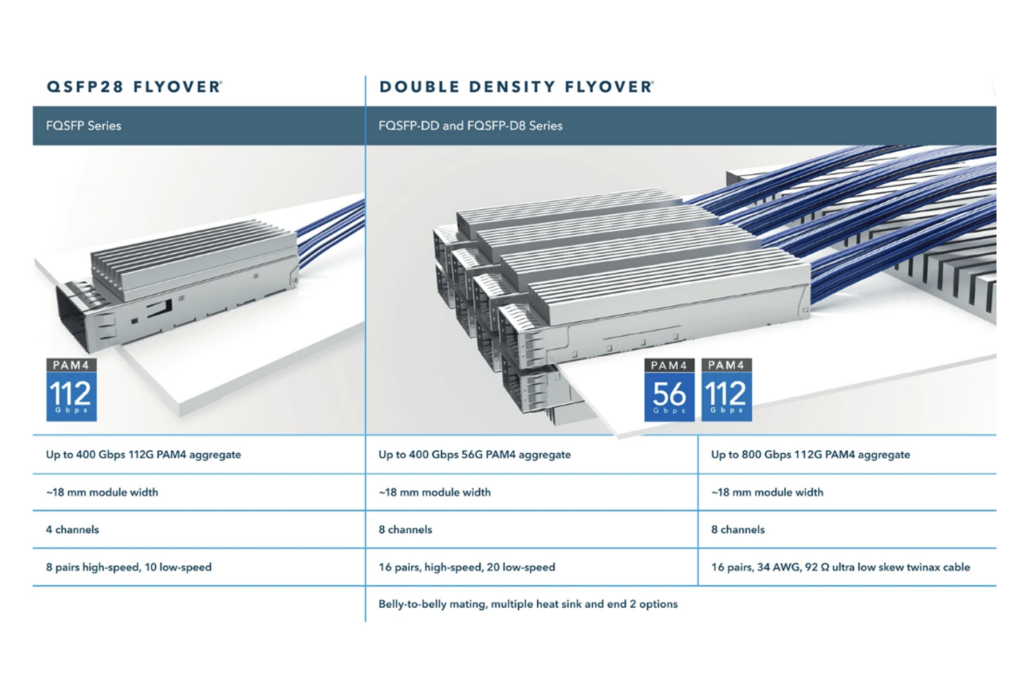

The current internal IO receptacle pluggable styles, like the SFP-DD, QSFP-DD, OSFP, and OSFP-XD specifications, are increasingly being used to support higher-end links. System interfaces often use 8, 16, and 32-lane internal cable links instead of four-lane links such as the QSFP112. These wider links now include internal fanout-type cable assemblies.

These connectors are fastened to the inside of IO faceplates with customized or standardized metal cages. The higher-performance generations of these pluggable receptacle styles vary by manufacturer. Feature variations include a heatsink implementation with a proprietary paddleboard or pre-attached copper-wire termination designs and methods. The signal contact design, connector housing, thermal circuit design, thermal rating range, latency, BER, IL, and other performance parameters also vary.
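As a back-of-the-envelope sketch of what the wider links imply, the snippet below estimates the aggregate rate per direction and the number of twin-axial pairs for each lane count. It assumes a nominal 100G per lane and that each bidirectional lane uses one transmit and one receive differential pair, each on its own twin-axial cable. Both are illustrative assumptions rather than figures from any one specification.

```python
# Assumption: one transmit pair plus one receive pair per bidirectional lane.
PAIRS_PER_LANE = 2


def link_summary(lanes: int, per_lane_gbps: float) -> dict:
    """Aggregate rate (per direction) and twin-axial pair count for a link."""
    return {
        "lanes": lanes,
        "aggregate_gbps": lanes * per_lane_gbps,
        "twinax_pairs": lanes * PAIRS_PER_LANE,
    }


# Lane counts from the text, at a hypothetical nominal 100G per lane.
for lanes in (4, 8, 16, 32):
    s = link_summary(lanes, per_lane_gbps=100)
    print(f"{s['lanes']:>2} lanes: {s['aggregate_gbps']:g}G per direction, "
          f"{s['twinax_pairs']} twin-axial pairs")
```

The pair count grows linearly with lane count, which helps explain the pressure on cable bulk, routing, and airflow inside the box.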

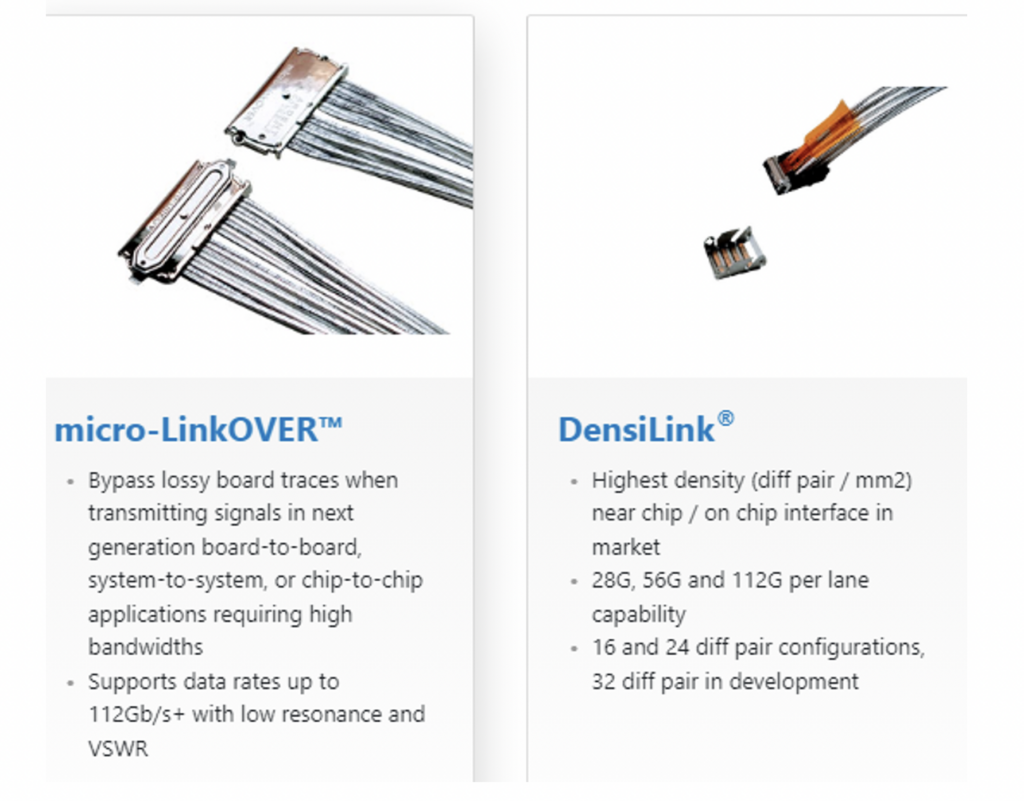

The newest, high-performance twin-axial copper cable designs differ, and only a few suppliers can achieve high-volume production. This limitation makes it difficult to attain internal cable assembly product interoperability at 100+G and 200+G per-lane signaling rates.

Chip-side connectors/cables attached near or on the switch are primarily proprietary. Developing 100+G cables and connectors for low-profile and high-heat applications is a large manufacturing undertaking and a significant CAPEX and OPEX investment. This is because these products have different lengths, widths, heights, and depths, and varying contact pitch grids and other parameters.

Here’s an example of the DualLine ASIC cable assembly.

Changes

CCIX was an accelerator and open-standard interface consortium driven by Huawei. It officially closed in 2020 and converted into a semi-proprietary interface used by a few OEMs and hyperscalers. The rumor is it plans to use SFF TA 1020 and pluggable-type cabling and connectors for internal links.

It’s likely some members might start using CXL internal cabling, especially given the recent consortium consolidations.

For example, GenZ, a previous accelerator and open-standard interface consortium driven by HPE and Dell, also shut down and has now merged with CXL. GenZ has contributed key specifications to the CXL consortium. These include the specifications for the SFF TA 1002 and SFF TA 1020 internal copper connector and cable assembly family.

OpenCAPI, also a previous accelerator and open standard driven by IBM, has recently closed and is merging with CXL.

CXL is a widely used open-standard accelerator interface, with specifications originated by Intel, that’s clearly winning a large majority of industry design interest.

This is a major inflection point where older products lose market share and newer cable and connector products rapidly gain interest and TAM. Future CXL implementations should achieve market share and ROI goals for most open connector/cabling standards and semi-custom product sales.

A few older products that might decrease in popularity for future use include U.2 SFF-8537, MCIO, SlimLine, SlimSAS, LowProfile-SlimSAS, MiniSAS, and MiniSAS-HD cabling.

Despite these changes, a large installed base will still support older cable types for a while.

SATA’s internal cabling shipments seem to be supporting the current installed base.

Aside from the new internal copper connector and cabling solutions, competing internal optical cabling solutions like OBOs and some CPOs are also being developed by major developers and users. Many market leaders are investing in both copper and optical technologies. They’re weighing roadmaps and VoC feedback to see which technology will gain the best product traction.

Suppliers are also wise to invest in both copper and optical solutions. Many market players are supporting and planning for internal optical cables and front-plate assemblies. Others are working on the optical external and internal interconnections. It’s best to be prepared.

Questions

Would a potential new OCP Server spec include internal pluggable receptacle connectors? Does the market need to select another connector family or modify existing ones? What are your thoughts?

Keep an eye on the new OCP and CXL connector developments and on which connectors are specified in the IEEE 802.3df and InfiniBand XDR specs.
