Persistent memory has become a key technology for the cloud as data-center speed, efficiency, and volume continue to increase. In high-performance computing, cloud workloads often require data to move between multiple physical systems. Data may also be replicated across systems for redundancy and protection, or split across them for performance.

High-performance networking fabrics provide the interconnect between these systems. The interconnect switch fabric is the basic topology of the network design. Besides persistent memory, these systems also rely on physical-layer essentials such as copper cable, which carries electrical signals between them.

As persistent-memory switch-fabric interconnects continue to improve, they could support several new cloud and hybrid storage applications. That is good news. At the same time, current trends in copper interconnect use must be considered, because copper interconnects are going through a major market inflection point.

The cables

Both direct-attach copper cables (DACs) and active optical cables (AOCs) have been widely used in high-performance computing network cabling systems, thanks to their low latency, power consumption, and cost.

DACs are classified as passive or active. Both types transmit electrical signals directly over copper. However, the use of external passive DACs has been decreasing rapidly, with fewer length options and fewer cables available. This is particularly true for in-rack links at 56G and 112G per lane.

DACs are also no longer used for top-of-rack (ToR) or end-of-row (EoR) links in newer network architecture designs, and ToR switch-to-leaf-server links have become less common. Leaf switches aggregate traffic from servers and connect it to the rest of the network.

As network speeds and capabilities advance, expect more external DACs to be used for middle-of-rack (MoR) switch-to-leaf links.

Market trends

The average has fallen from 24 DACs per rack at 25G per lane to 6 DACs per rack at 56G per lane. Sustaining even 6 DACs per rack at 112G per lane is unlikely with passive copper alone, because the higher signaling rate increases cable loss and shortens the usable passive reach. This is where active copper DACs offer greater potential.

To achieve useful link reaches, external cable plugs now embed new active copper chips (such as Spectra-7’s GC1122). In fact, active copper DACs are expected to reach 50 percent of the total addressable market (TAM) within the next year or two. Some internal cable applications also use active copper link-extender chips.

To keep revenues growing, interconnect suppliers would be wise to expand their internal copper cabling business. Unit counts for internal copper cables are rising rapidly: 24 or more per box, and up to 250 per rack for new box types.

The development of even higher-speed data transfer protocols, such as internal 128G per-lane Fibre Channel, OIF 224G, and even faster per-lane test equipment, means cable and connector suppliers will have to meet evolving demands. It will be interesting to watch how the market develops and how suppliers continue to adapt.

Evolving applications

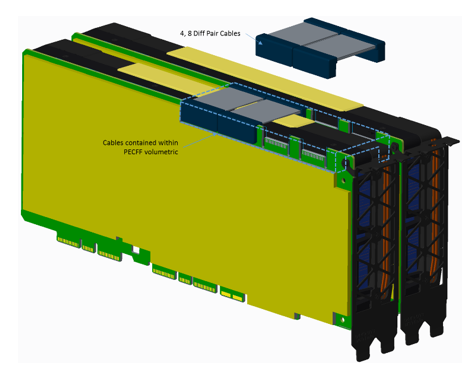

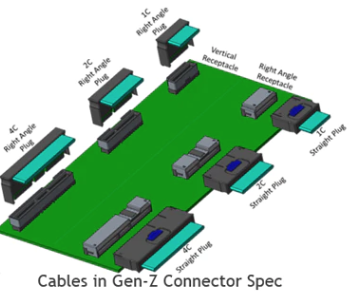

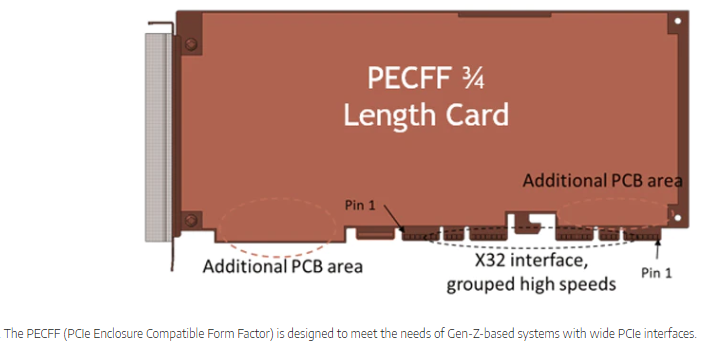

PCIe Enclosure Compatible Form Factor, or PECFF, is a mechanical form factor developed by Gen-Z members to reduce solution cost and complexity. Some newer servers now use PECFF and the related internal twin-axial SFF-TA-1002 inter-card cabling.

This packaging system supports top- and bottom-running planar cables, including Y-breakout or fanout implementations. Hybrid, multi-speed-rate link cables also now support 400G, 800G, and 1.6T I/O interfaces, as well as various accelerator types (Figures 1 and 2).

Current cabling and connector products have kept pace with advancing technologies and requirements, supporting very high performance. This includes 112G per-lane signaling with forward error correction (FEC), along with strong thermal performance and electrical signal integrity.

Developers are currently working on very short 212/224G per-lane cable assemblies, such as next-generation Gen-Z Memory Fabric 16-lane internal cable links, although some developers are focused on alternative, non-Gen-Z connector types such as SFF-1016.
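The lane counts behind these interface rates come down to simple arithmetic. The sketch below assumes (this is not stated in the article) that a 56G lane carries about 50G of payload, a 112G lane about 100G, and a 212/224G lane about 200G, with the difference going to FEC and coding overhead. The function names are hypothetical, chosen for illustration.

```python
import math

# Assumption for illustration: nominal payload per electrical lane (Gb/s).
# The signaling rate is higher than the payload because of FEC/coding overhead.
PAYLOAD_PER_LANE_GBPS = {
    "56G": 50,
    "112G": 100,
    "224G": 200,
}


def lanes_needed(interface_gbps: int, lane_class: str) -> int:
    """Minimum lane count to carry an interface rate at a given lane class."""
    per_lane = PAYLOAD_PER_LANE_GBPS[lane_class]
    return math.ceil(interface_gbps / per_lane)


def aggregate_gbps(lanes: int, lane_class: str) -> int:
    """Nominal payload capacity of a multi-lane link."""
    return lanes * PAYLOAD_PER_LANE_GBPS[lane_class]


if __name__ == "__main__":
    for rate in (400, 800, 1600):
        for lane_class in ("112G", "224G"):
            print(f"{rate}G over {lane_class} lanes: {lanes_needed(rate, lane_class)} lanes")
    # A 16-lane internal link at 224G per lane, under the same assumption.
    print(f"16 lanes at 224G: {aggregate_gbps(16, '224G')} Gb/s nominal")
```

Under these assumptions, a 1.6T interface needs 16 lanes at 112G but only 8 at 224G, which is one reason the move to 212/224G per-lane cable assemblies matters for lane-count-limited internal links.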

In certain application-specific designs, links can run from the bottom of the cards when the internal cable connectors are attached to the baseboard. To preserve airflow (and safety) in a thermally constrained system, developers often choose internal, bottom-mounted, high-temperature-rated twin-axial cables rather than routing signals over a long reach across a limited PCB baseboard (Figure 3).

New trends

Other internal twin-axial cabling applications are also emerging. For example, some now use a cable link to connect the back end of a half-size blade or module to a baseboard, especially near a switch chip.

Other new market segments and applications are developing a MoR intra-PoD switch for leaf devices using non-pluggable cable types, such as those based on Molex’s two-piece IMPEL connector, which is used for the OPEN-19 link specification. These cable types typically do not use paddleboard plugs, and they offer embedded EPROMs (erasable programmable read-only memory) with memory-map functionality.

Copper-cabling suppliers are also targeting new internal copper-cabled backplane, midplane, and baseplane businesses for advanced memory, server, and storage arrays. For instance, the SFF-1002 internal cabling system is likely to offer a hybrid copper and optical interconnect for 100G and 200G per lane soon.

AOCs are increasingly selected for link lengths of 5 to 10 meters or longer. Meanwhile, most R&D investment is now going into internal copper cabling products rather than DACs.
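The reach trade-off discussed in this article (passive copper reach shrinking as per-lane rates rise, active copper filling the gap, and AOCs taking over at longer lengths) can be expressed as a simple selection rule. This is a hypothetical sketch, not a vendor or standards procedure. The 5 m AOC threshold comes from the text above; the passive-DAC reach limits are illustrative assumptions that roughly track common IEEE 802.3 copper-cable reach objectives, and real limits depend on wire gauge, host channel loss, and the specific product.

```python
# Hypothetical passive-DAC reach limits (meters) per lane rate (Gb/s).
# Illustrative assumptions only; check actual product and standard specs.
PASSIVE_DAC_MAX_M = {25: 5.0, 56: 3.0, 112: 2.0}

# From the article: AOCs are increasingly chosen for links of 5 m or longer.
AOC_MIN_M = 5.0


def choose_cable(length_m: float, lane_gbps: int) -> str:
    """Pick a cable type for a link length and per-lane rate (hypothetical rule)."""
    if length_m <= 0:
        raise ValueError("link length must be positive")
    if lane_gbps not in PASSIVE_DAC_MAX_M:
        raise ValueError(f"no reach data for {lane_gbps}G per lane")

    passive_max = PASSIVE_DAC_MAX_M[lane_gbps]
    if length_m <= passive_max:
        return "passive DAC"
    if length_m < AOC_MIN_M:
        # Beyond passive reach but short enough for retimed/redriven copper.
        return "active copper DAC"
    return "AOC"


if __name__ == "__main__":
    for lane in (56, 112):
        for length in (1.5, 2.5, 4.0, 7.0):
            print(f"{length} m at {lane}G/lane -> {choose_cable(length, lane)}")
```

The same 2.5 m link that a passive DAC can serve at 56G per lane falls to active copper at 112G per lane under these assumed limits, which mirrors the shift toward active copper DACs described in the market-trends discussion.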

Several optical system equipment providers are using competing interconnects, such as COBO on-board optical devices. These designs connect internal optical ribbon cables to passive optical faceplate port connectors, and they compete for design-in opportunities at 100G and 200G rates or higher.

It’s worth reviewing the latest SFF-1020 for the various SFF-TA-1002 connector and cabling specifications.
