The world of gigabits began some 25 years ago, with a progression of interface lanes and links from 100G and 200G to 400G and then 800G, using standard and proprietary IO connectors and cables. Believe it or not, gigabit lanes were first used in 1999.

Today, we’re entering the terabit phase, with interface links using 1.6T and 3.2T products and 6.4T+ in R&D. This year might officially mark the start of the terabit era. The development of IO connectors and cables for 212G and 224G per electrical lane is driving terabit link product options.

Several high-end accelerator and system interfaces currently use the OSFP-XD family of external and internal connectors, heatsinks, cages, cables, and modules, which has 16 lanes. Products running at 112G, and even 224G, per lane are also starting to appear on early manufacturing lines.

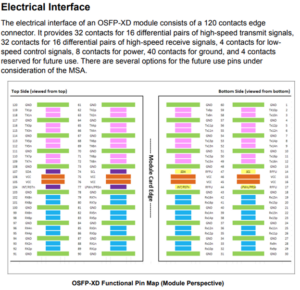

For example, the OSFP-XD connector uses a 0.6 mm contact pitch, with 32 differential pairs and 120 contacts, and is itself about one inch wide and three inches deep.
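As a rough sanity check on those numbers, the sketch below splits the 120 contacts into high-speed signal contacts and everything else. It assumes each of the 16 lanes has one transmit and one receive differential pair (consistent with the 32 pairs quoted) and that the leftover contacts go to grounds, power, and low-speed control; the function name is hypothetical, and the real pin assignment should come from the OSFP MSA documents.

```python
# Hypothetical contact-budget sketch for a 16-lane OSFP-XD connector.
# Assumption: each lane has one Tx and one Rx differential pair, and each
# differential pair uses two signal contacts (P and N).
LANES = 16
PAIRS_PER_LANE = 2       # Tx + Rx (assumed)
CONTACTS_PER_PAIR = 2    # P and N conductors
TOTAL_CONTACTS = 120     # figure quoted in the article


def contact_budget(lanes: int, total_contacts: int) -> dict:
    """Split a connector's contacts into high-speed signal and other contacts."""
    pairs = lanes * PAIRS_PER_LANE
    signal = pairs * CONTACTS_PER_PAIR
    if signal > total_contacts:
        raise ValueError("not enough contacts for the requested lane count")
    return {
        "differential_pairs": pairs,
        "signal_contacts": signal,
        # Remainder: grounds, power, and low-speed control (assumed split).
        "other_contacts": total_contacts - signal,
    }


if __name__ == "__main__":
    print(contact_budget(LANES, TOTAL_CONTACTS))
    # {'differential_pairs': 32, 'signal_contacts': 64, 'other_contacts': 56}
```

Under these assumptions, 64 of the 120 contacts carry high-speed signals and 56 remain for everything else.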

Here’s a look at the OSFP-XD’s pinout:

Currently, ten 200G single-lambda wavelengths can be combined in one fiber to carry 2.0T. As one example, this supports switch-radix 2.0T link applications in large data centers.

Similarly, four 400G single-lambda wavelengths can be combined to support 1.6T transmission over one fiber. Expect new products soon that achieve 1.2T single-lambda transmission.
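The fiber capacities above follow from simple multiplication. The sketch below is a hypothetical helper, not a vendor tool: it multiplies the wavelength count by the nominal per-wavelength rate and ignores FEC and framing overhead, so real payload capacity will be somewhat lower.

```python
# Aggregate capacity of one fiber carrying several single-lambda wavelengths:
# total = number of wavelengths x nominal rate per wavelength.
def fiber_capacity_tbps(wavelengths: int, gbps_per_lambda: float) -> float:
    """Return nominal aggregate fiber capacity in Tbps (1 Tbps = 1000 Gbps)."""
    if wavelengths < 1 or gbps_per_lambda <= 0:
        raise ValueError("need at least one wavelength and a positive rate")
    return wavelengths * gbps_per_lambda / 1000


if __name__ == "__main__":
    # The two cases described in the text.
    print(fiber_capacity_tbps(10, 200))  # 2.0
    print(fiber_capacity_tbps(4, 400))   # 1.6
```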

The key to the successful development and use of terabit-class link interconnect products will be innovative test instrumentation that validates design, performance, and compliance, and that supports interoperability testing.

Here’s a look at what test-equipment developer Keysight Technologies is working on…

Testing with thermal load modules and emulation software is a critical validation toolset. MultiLane is a global module supplier whose products validate new terabit links with 200G+ lanes.

MultiLane also offers thermal load modules and controller devices for 16 × 112 Gbps links of up to 1.792T. Here are a few of MultiLane’s new 16-lane OSFP-XD test modules…

Containing 16 power spots, each dissipating up to 4 W, the ML4064-XD-TL can be configured for a maximum of 44 W. The extra power spots offer significant flexibility for early adopters looking to validate potential OSFP-XD thermal designs.

One upcoming form factor that seems poised to claim a significant share of the market is OSFP-XD at 16 × 112 Gbps. MultiLane’s ML4064-XD-TL OSFP-XD thermal load module provides a versatile solution for thermal emulation.
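The figures above imply a budgeting constraint: 16 spots at 4 W each would total 64 W, above the 44 W maximum, so not every spot can run at full power at once. The sketch below shows how a test script might check a per-spot power profile against those limits before applying it. The function name and the example profile are hypothetical; this is not MultiLane’s control software or API.

```python
# Hypothetical pre-check for a 16-spot thermal load profile.
# Limits come from the article's figures; the interface is illustrative only.
from typing import Sequence

NUM_SPOTS = 16
MAX_W_PER_SPOT = 4.0
MAX_W_TOTAL = 44.0


def validate_spot_powers(powers_w: Sequence[float]) -> float:
    """Return total dissipation in watts, or raise ValueError if a limit is exceeded."""
    if len(powers_w) != NUM_SPOTS:
        raise ValueError(f"expected {NUM_SPOTS} spot values, got {len(powers_w)}")
    for i, p in enumerate(powers_w):
        if not 0.0 <= p <= MAX_W_PER_SPOT:
            raise ValueError(f"spot {i}: {p} W outside 0..{MAX_W_PER_SPOT} W")
    total = sum(powers_w)
    if total > MAX_W_TOTAL:
        raise ValueError(f"total {total} W exceeds module limit {MAX_W_TOTAL} W")
    return total


if __name__ == "__main__":
    # Hypothetical profile: half the spots emulate a hot DSP region.
    profile = [4.0] * 8 + [1.5] * 8   # 32 W + 12 W = 44 W
    print(validate_spot_powers(profile))  # 44.0
```

Checking both the per-spot and total limits keeps a script from requesting a profile, such as all 16 spots at 4 W, that the module cannot deliver.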

Active electrical cables (AECs) are likely to account for a larger share of 200G+ per-lane link shipments than passive copper cables. In fact, AECs have already gained market share in 100G+ applications.

But can AOCs win hyperscaler data-center market share in 200G+ per-lane applications? Will demand for the new optical connectors and cables for OBO, CPO, and chiplet modules increase this year?

Credo offers 1.6T AEC cable assemblies using 16-lane OSFP-XD connectors. The company develops its own chips, which are embedded in the cable plugs, and it appears to be working on next-generation 3.2T products as well.

This is Credo’s recent HiWire announcement:

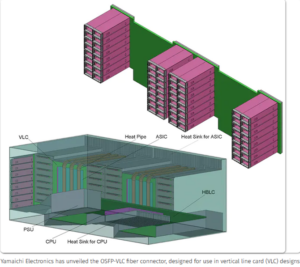

Yamaichi has released a new fiber connector product family with enhanced porting options for high-end switch equipment with many OSFP connector ports. The new OSFP-VLC connector is designed for vertical line card connectivity.

Vertical OSFP and OSFP-XD connectors could improve terabit link budgets and extend reach. An OSFP-XD-VLC connector can enable better-performing PCB or cabled backplanes that support several form factors. The OSFP-VLC is also compatible with Nubis Communications’ new VLC platform, and the solution can likely support many terabit switch ports.
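One way to picture the link-budget benefit: if a vertical connector shortens the host PCB trace between the switch ASIC and the port (our assumption about the mechanism), the decibels saved on the board can be spent on cable length. The sketch below uses entirely hypothetical loss figures to illustrate that trade-off; they are not drawn from any OSFP, OIF, or IEEE budget.

```python
# Illustrative channel loss budget: dB not lost on host PCB traces can be
# spent on a longer cable. Every number here is a hypothetical placeholder.
def max_cable_length_m(budget_db: float, fixed_db: float,
                       pcb_trace_db: float, cable_db_per_m: float) -> float:
    """Longest cable that fits in what remains of the end-to-end loss budget."""
    if cable_db_per_m <= 0:
        raise ValueError("cable loss per meter must be positive")
    remaining_db = budget_db - fixed_db - pcb_trace_db
    return max(remaining_db, 0.0) / cable_db_per_m


BUDGET_DB = 30.0       # hypothetical end-to-end loss budget
FIXED_DB = 6.0         # hypothetical package, via, and connector losses
CABLE_DB_PER_M = 8.0   # hypothetical twinax loss per meter

if __name__ == "__main__":
    for label, trace_db in (("long host traces", 14.0), ("short host traces", 6.0)):
        reach = max_cable_length_m(BUDGET_DB, FIXED_DB, trace_db, CABLE_DB_PER_M)
        print(f"{label}: {reach:.2f} m")  # 1.25 m, then 2.25 m
```

With these placeholder numbers, trimming 8 dB from the host traces adds a full meter of cable reach.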

Interestingly, Nokia and Vodafone Turkey recently announced the development of an optical 1-terabit clear-channel IP link between Asia and Europe. The successful trial used Nokia’s 7950 XRS router, based on the company’s FP4 chipset, with dual-terabit IP interfaces.

Intel demonstrated a 32-lane × 106G PAM4 chip and link totaling about 3.4T. It might have the bandwidth to support 32 lanes × 112G, or about 3.6T per link or port. The OIF-CEI-112G XSR specification was used as the electrical interface basis for this chip, which can also support 32 lanes × 53G PAM4 for 1.7T ports and links.
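The lane arithmetic is easy to check. Multiplying lane count by per-lane rate gives 3.392T for 32 × 106G, 3.584T for 32 × 112G, and 1.696T for 32 × 53G; the same calculation reproduces the 1.792T figure for a 16 × 112G OSFP-XD link. The helper below is a minimal sketch that uses nominal line rates and ignores encoding and FEC overhead.

```python
# Nominal aggregate bandwidth: lanes x per-lane line rate.
# Encoding and FEC overhead are ignored, so payload rates will be lower.
def aggregate_tbps(lanes: int, gbps_per_lane: float) -> float:
    """Return the aggregate rate in Tbps (1 Tbps = 1000 Gbps)."""
    if lanes < 1 or gbps_per_lane <= 0:
        raise ValueError("lanes and per-lane rate must be positive")
    return lanes * gbps_per_lane / 1000


CASES = {
    "32 x 106G PAM4": (32, 106),
    "32 x 112G": (32, 112),
    "32 x 53G PAM4": (32, 53),
    "16 x 112G OSFP-XD": (16, 112),
}

if __name__ == "__main__":
    # Prints 3.392, 3.584, 1.696, and 1.792 Tbps respectively.
    for name, (lanes, rate) in CASES.items():
        print(f"{name:<18} {aggregate_tbps(lanes, rate):.3f} Tbps")
```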

It’s possible to envision a dual OSFP-VLC connector and cable solution set for this terabit application.

Looking forward

It’s important that developers design or source products that can handle the hotter 90°F (about 32°C) ambient data-center temperatures now required by companies such as Amazon, Google, and Microsoft.

Ideally, developers should carefully re-analyze the product lifecycle to ensure the best time-to-failure results for each component and subassembly. For example, this means using twinax cables with ample margin for a 3-, 5-, or 10-year product life.
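A common way to put numbers on that lifecycle re-analysis is the Arrhenius model, brought in here as an illustration since the article does not name a specific method. The sketch converts the 90°F ambient to kelvin and estimates how much faster a temperature-driven failure mechanism would proceed than at a hypothetical 77°F baseline. The 0.7 eV activation energy and the 77°F reference are assumptions, and the model properly applies to component temperature, so using the ambient shift assumes components warm by the same amount.

```python
import math

# Boltzmann constant in eV/K.
K_B_EV = 8.617333262e-5


def f_to_kelvin(temp_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def arrhenius_af(ea_ev: float, t_ref_k: float, t_hot_k: float) -> float:
    """Arrhenius acceleration factor of t_hot relative to t_ref (>1 if hotter)."""
    return math.exp((ea_ev / K_B_EV) * (1.0 / t_ref_k - 1.0 / t_hot_k))


# Hypothetical inputs: activation energy depends on the failure mechanism,
# and the 77 F reference ambient is only an example.
EA_EV = 0.7
T_REF_K = f_to_kelvin(77.0)   # 25 C
T_HOT_K = f_to_kelvin(90.0)   # about 32.2 C, the ambient cited in the article

if __name__ == "__main__":
    af = arrhenius_af(EA_EV, T_REF_K, T_HOT_K)
    print(f"acceleration factor: {af:.2f}")
    for years in (3, 5, 10):
        # Life the part would need at the reference ambient to still
        # deliver `years` of service at the hotter ambient.
        print(f"{years}-year target -> ~{years * af:.1f} years at 77 F equivalent")
```

With these inputs the acceleration factor comes out a little under 2, so a part would need roughly twice its target life at the old ambient to reach that target at 90°F.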

Additionally, it’s important to closely track the OIF-CEI-224G specifications, IEEE 802.3df, newer IEEE 802.3 working groups, the OSFP-XD MSA, and other industry groups. Pluggable users should be aware that QSFP-DD, OSFP, and OSFP-XD receptacles are typically more aerodynamic than older QSFP receptacles, maximizing airflow and heat removal.

8-lane QSFP-DD connectors are slightly smaller, which favors port density, but their thermal performance is less competitive than that of 8-lane OSFP connectors, heatsinks, cages, and cables.

The development of terabit link standards and specifications seems to be going well, but developers and global industry leaders need to cooperate more closely. Fewer suppliers can measure, design, and produce pluggable connectors, cables, and modules running at 100G+ and 200G+ per lane while meeting time-to-market, interoperability, and high-volume ramp-up requirements. Process and product development costs also seem to grow every year.

The hyperscaler market segment will likely rely more on internal and external optical links than on copper at 200G+ per lane, although the enterprise segment is likely to keep using copper short-reach links, with a growing share of AECs.

Petabit optical link development also appears to be beginning. Fujikura and the University of Copenhagen have announced a new optical chip that transmitted 1.84 Pbit/s over a 7.9-km, 37-core fiber link using 223 wavelength channels.
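Splitting the headline figure into averages shows where the capacity comes from. The sketch below assumes each of the 37 cores carries all 223 wavelength channels and that throughput is spread evenly; that is a simplification and does not describe the experiment’s actual channel plan.

```python
# Back-of-the-envelope breakdown of aggregate fiber throughput into
# per-core and per-channel averages. Assumes every core carries the same
# number of wavelength channels and throughput is spread evenly.
def channel_breakdown(total_pbps: float, cores: int, wavelengths: int):
    """Return (channel count, average Tbps per core, average Gbps per channel)."""
    total_gbps = total_pbps * 1e6            # 1 Pbps = 1,000,000 Gbps
    channels = cores * wavelengths           # spatial x spectral channels
    per_core_tbps = total_gbps / cores / 1e3
    per_channel_gbps = total_gbps / channels
    return channels, per_core_tbps, per_channel_gbps


if __name__ == "__main__":
    channels, per_core, per_channel = channel_breakdown(1.84, 37, 223)
    print(f"{channels} channels, ~{per_core:.1f} Tbps per core, "
          f"~{per_channel:.0f} Gbps per channel")
```

For the quoted figures it reports 8,251 channels, roughly 49.7 Tbps per core, and roughly 223 Gbps per channel.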

Looking back

About 25 years ago, the IO interface world was transitioning from megabit lanes and/or links to the world of gigabits per lane. There was also a major shift at that time from using parallel IO interfaces to serial IO ones.

For example, we’ve gone from 800 Mbps HIPPI IO interfaces, which used TE’s Amplimite 100-position, 0.5 mm pitch connectors (3.5 inches wide × 0.75 inches deep) and 50-differential-pair cables, to 10 Gbps SerDes technology and QSFP connectors that are about 0.75 inches wide and 3.5 inches deep.
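The parallel-to-serial shift is easier to appreciate per wire pair. The sketch assumes HIPPI-800’s 800 Mbps is carried on a 32-bit parallel data path (our understanding of the HIPPI physical layer, stated here as an assumption), so each data pair runs at 25 Mbps, while a single 10 Gbps SerDes lane carries 400 times that on one pair.

```python
# Compare a parallel interface's per-line rate with a single serial lane.
# Assumption: HIPPI-800 moves 32 data bits in parallel, so each data pair
# carries 1/32 of the 800 Mbps total.
HIPPI_MBPS = 800
HIPPI_DATA_LINES = 32        # assumed 32-bit parallel data path
SERDES_LANE_MBPS = 10_000    # one 10 Gbps serial lane


def per_line_mbps(total_mbps: float, data_lines: int) -> float:
    """Rate carried by each data line of a parallel bus."""
    return total_mbps / data_lines


if __name__ == "__main__":
    line = per_line_mbps(HIPPI_MBPS, HIPPI_DATA_LINES)
    print(f"HIPPI per data pair: {line} Mbps")                          # 25.0
    print(f"10G lane vs one HIPPI pair: {SERDES_LANE_MBPS / line}x")     # 400.0
    print(f"10G lane vs whole HIPPI bus: {SERDES_LANE_MBPS / HIPPI_MBPS}x")  # 12.5
```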

Later, the SuperHIPPI interface operated at 50G per second per link. Early NumaLink IO interfaces used Meritec’s GNDPlane connector and cable, a modified MDR 50-differential-pair twinaxial design. These had PCB footprints of similar size (in square inches) but better port density and functionality.

The QSFP paddleboard cable and receptacle connectors sustained that product lifecycle for many years. Judging from the images below, these older interface connectors were about the same height as the newer pluggable QSFP type.

Here are the HIPPI and NumaLink 50TP copper wire pairs…

Here’s the former SGI Altix’s NumaLink 2.0 and 3.0 parallel IO interface, which also used 50TP or 50-twinax cable with a 100-position connector 3.5 inches wide (made by Meritec)…

The photo above shows the sturdy latch, which some applications required, such as those inside early vehicles like Humvees.

So far, 2023 seems set to offer several internal terabit cable assemblies, in both custom and standard options (such as the SFF-TA-1002 and SFF-TA-1020 connectors). It’ll be worth attending the upcoming DesignCon 2023 event to see the latest advancements in terabit link components, assemblies, devices, and new applications.
